Comparing AI coding assistants: Aider, Cursor, and Windsurf tested in a one-prompt challenge

In this tutorial, we test three popular AI coding assistants (Aider, Cursor, and Windsurf) to see how efficiently each can build a retrieval-augmented generation (RAG) application from a single, detailed prompt. The goal is to evaluate each tool's strengths and real-world applicability, and to consider the role AI-powered coding assistants now play in modern software development workflows.

Introduction to the AI coding assistant experiment

This challenge is not an ordinary app-building exercise. Working from one detailed prompt, each tool has to handle the complexity of a modern RAG app, follow modularity requirements and other specific constraints, and account for the nuances of recent LangChain updates. Let’s explore how Aider, Cursor, and Windsurf handled the task.

What is retrieval-augmented generation (RAG), and why this test?

RAG combines retrieved information (e.g., from a database or file storage) with a large language model’s reasoning to answer user queries. Building one requires solid backend engineering to connect retrievers, vector stores, and large language models. Although RAG apps usually have simple user interfaces (UIs), the behind-the-scenes work is a demanding test for AI coding assistants because it calls for modular design, correct dependency setup, and methodical debugging.
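To make the retrieve-then-generate idea concrete, here is a minimal, dependency-free sketch. It is not the code any of the assistants produced: word-count vectors stand in for a real embedding model, a plain list stands in for a vector store such as ChromaDB, and the final LLM call is left out, so the result is the augmented prompt that would be sent to the model. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())  # Counter returns 0 for missing words
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k stored documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user's question with the retrieved context before calling an LLM."""
    joined = "\n\n".join(context)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {query}"
    )


docs = [
    "ChromaDB is a vector store that keeps embeddings on local disk.",
    "Streamlit turns Python scripts into simple web apps.",
    "LangChain provides loaders, retrievers, and chains for RAG.",
]
question = "Which vector store keeps embeddings on disk?"
top = retrieve(question, docs, k=1)
prompt = build_prompt(question, top)
```

In a real app, `embed` would call an embedding model and `retrieve` would query the vector store, but the flow is the same: embed the query, rank stored chunks by similarity, and paste the best ones into the prompt.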

We chose this test because it reflects a use case at the center of how AI is changing software workflows. Although the app is much smaller in scope than a large production system, its details create hurdles that only skilled developers, or capable assistants, can handle competently.

The experiment setup and methodology

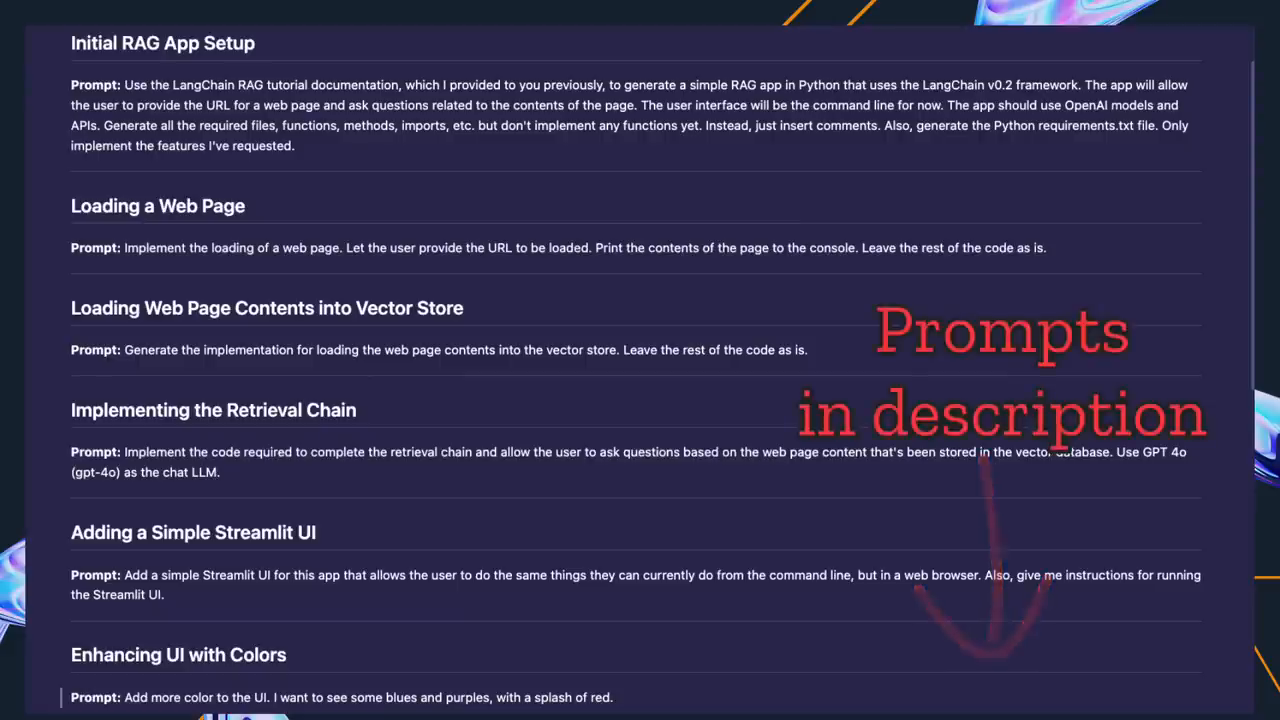

The process followed for each tool was identical, ensuring fairness in evaluation:

- A single, detailed prompt was prepared that combined all requirements with precise specifications, such as a modular file structure and technology-stack constraints.

- The latest LangChain documentation for creating RAG applications was supplied as a reference for each tool.

- Each AI tool was tasked to create a fully functional app by interpreting the prompt and referencing the documentation as needed.

- Errors were monitored, and minimal guidance was provided to see how effectively the assistants adapted to iterative fixes.

Aider: the guided coder

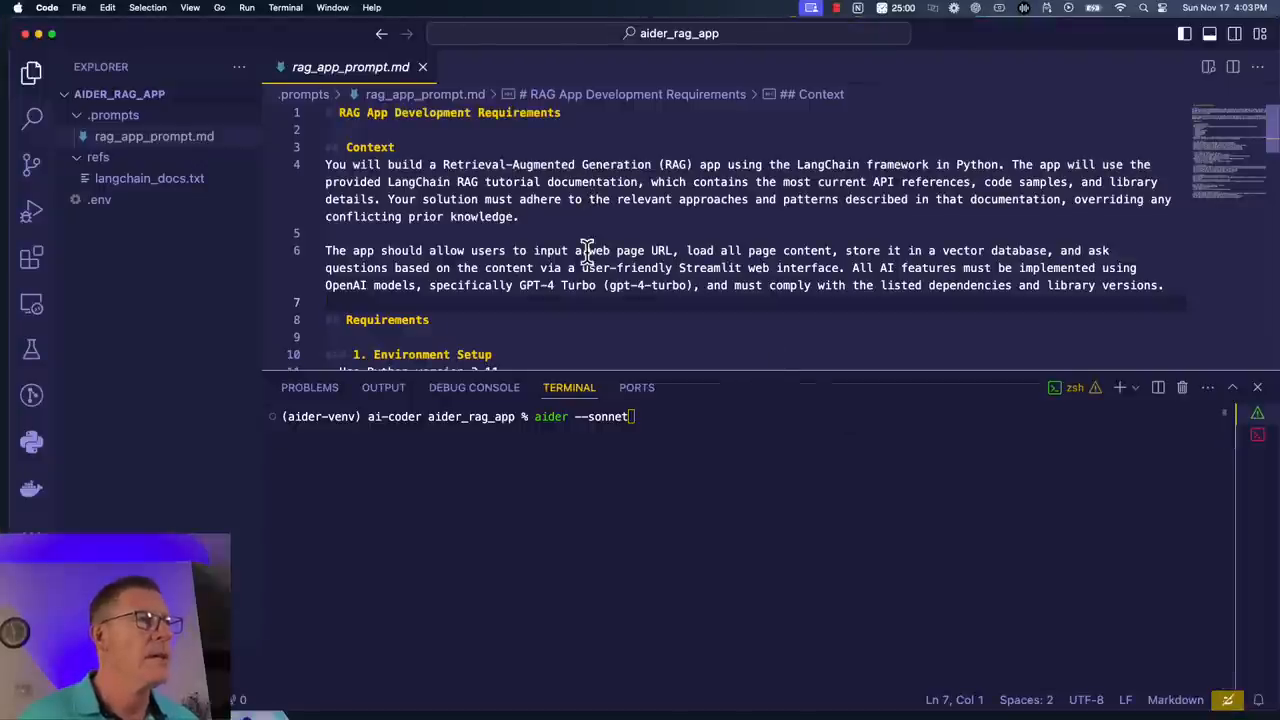

Initial setup and execution

Aider started strong. Working in a pre-created folder structure in VS Code that contained the prompt and the LangChain documentation, Aider quickly generated all the essential components: requirements.txt, app.py, and supporting modules such as document_loader.py, vector_store.py, and rag_chain.py.

Aider generating required files

The AI also modularized its output as requested, with:

- Document Loader: Handles web-based content extraction.

- Vector Store: Manages embeddings within a local ChromaDB database.

- RAG Chain: Facilitates LLM-powered Q&A.

- Streamlit app.py: Handles user input through a simple UI.
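The article doesn't show the generated modules, but loaders in RAG apps usually also split pages into overlapping chunks before embedding them, so each retrieved piece fits comfortably in the model's context. LangChain's own RAG tutorial uses `RecursiveCharacterTextSplitter` for this, which prefers to break on paragraph and line boundaries. The sketch below is a simpler, hypothetical fixed-window splitter that only illustrates what `chunk_size` and `chunk_overlap` mean.

```python
def split_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[str]:
    """Split text into character windows of chunk_size that overlap by chunk_overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each new window starts after the previous one
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


example_chunks = split_text("abcdefghij", chunk_size=4, chunk_overlap=2)
```

The overlap repeats the tail of each chunk at the start of the next, so any passage no longer than the overlap appears whole in at least one chunk even when it straddles a boundary.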

Handling constraints and debugging

When errors arose (such as database management issues or unhandled parameters), Aider fixed them using detailed error screenshots supplied by the user. A key strength is that Aider not only debugs the code but also explains its fixes in language any developer can follow.

For instance, it identified problems with the persistence settings in the database configuration, which had changed in newly revised APIs, and fixed them efficiently.
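The article doesn't quote the error or the fix. One well-known change of this kind (the source doesn't say whether it is the one Aider hit): since ChromaDB 0.4, a persistent client is created with `chromadb.PersistentClient(path=...)` rather than the older `Settings(chroma_db_impl=..., persist_directory=...)` style, and data is saved automatically, so manual `persist()` calls are no longer needed. Code copied from older examples can therefore use settings or methods that no longer exist. The dependency-free toy below is hypothetical and does not use ChromaDB's API; it only illustrates what persistence settings are meant to guarantee: reopening the same directory brings back what was stored.

```python
import json
import os
import tempfile


class ToyPersistentStore:
    """Hypothetical stand-in for a persistent vector store (not ChromaDB's API).

    Every add() rewrites a JSON file inside persist_directory, so a new instance
    opened on the same directory reloads earlier records without a persist() call.
    """

    def __init__(self, persist_directory: str):
        os.makedirs(persist_directory, exist_ok=True)
        self.path = os.path.join(persist_directory, "records.json")
        if os.path.exists(self.path):
            with open(self.path, encoding="utf-8") as f:
                self.records = json.load(f)
        else:
            self.records = []

    def add(self, text: str, vector: list[float]) -> None:
        self.records.append({"text": text, "vector": vector})
        # Write through immediately so nothing is lost if the process exits.
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump(self.records, f)

    def __len__(self) -> int:
        return len(self.records)


# Usage: write through one instance, then reopen the same directory.
with tempfile.TemporaryDirectory() as db_dir:
    first = ToyPersistentStore(db_dir)
    first.add("ChromaDB keeps embeddings on disk.", [0.1, 0.2, 0.3])
    reopened = ToyPersistentStore(db_dir)
    reloaded_texts = [r["text"] for r in reopened.records]
```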

Limitations

Occasionally, Aider’s initial output needed extra fine-tuning that required the user to run commands outside the tool, such as setting up a virtual environment and manually handling specific dependencies like ChromaDB updates.
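Creating the environment itself is standard (for example `python -m venv .venv`, then `pip install -r requirements.txt`). For the dependency side, a small pre-flight check can flag packages that are missing or older than expected before the app starts. The sketch below is hypothetical: the minimum versions are placeholders, not values from the experiment, and its version parsing is deliberately rough. The `packaging` library's `Version` class handles pre-releases and other edge cases properly.

```python
import re
from importlib.metadata import PackageNotFoundError, version


def parse_release(text: str) -> tuple[int, ...]:
    """Rough parse of the leading numeric part, e.g. '0.5.3rc1' -> (0, 5, 3)."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        return ()
    return tuple(int(part) for part in match.group(0).split("."))


def at_least(installed: str, minimum: str) -> bool:
    """Compare releases after padding with zeros, so '0.5' counts as '0.5.0'."""
    a, b = parse_release(installed), parse_release(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_minimums(minimums: dict[str, str]) -> dict[str, str]:
    """Map each missing or outdated distribution to a short description of the problem."""
    problems = {}
    for name, minimum in minimums.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            problems[name] = "not installed"
            continue
        if not at_least(installed, minimum):
            problems[name] = f"installed {installed}, need >= {minimum}"
    return problems


# Hypothetical minimums; adjust them to whatever the generated code actually needs.
print(check_minimums({"chromadb": "0.4.0", "streamlit": "1.0"}))
```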

Cursor: a competitive contender

Cursor's Composer mode was up next, and it followed a familiar pattern. Using the single prompt and the LangChain documentation, Cursor read and analyzed the files to generate modular output similar to Aider's.

Cursor's modular app generation explained

Like Aider, Cursor focused on creating modular files:

- Loader for input handling.

- Vector database for embedding storage via ChromaDB.

- Chains integrating LangChain and LLMs.

Debugging interactions

Errors involving Beautiful Soup’s parser parameters showed Cursor’s ability to adjust its code with minimal input from the user. Given detailed screenshots of the errors, Cursor quickly rewrote the affected sections while preserving the overall structure.
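The article doesn't say which parameter caused the errors. For context, LangChain's RAG tutorial passes Beautiful Soup options to `WebBaseLoader` through `bs_kwargs`, for example `{"parse_only": bs4.SoupStrainer(class_=("post-title", "post-header", "post-content"))}`, so that only those parts of the page are parsed. A wrong keyword or class name there is one plausible way to hit such errors. The sketch below imitates that class filter with the standard library's `html.parser` instead of bs4, so it runs without extra packages; the parser class, helper name, and sample page are illustrative assumptions.

```python
from html.parser import HTMLParser


class ClassFilteredText(HTMLParser):
    """Collect text only from inside elements that carry one of the wanted CSS classes.

    A rough, dependency-free analog of bs4.SoupStrainer(class_=...); it assumes
    reasonably well-formed HTML in which every non-void element is closed.
    """

    VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
            "link", "meta", "source", "track", "wbr"}

    def __init__(self, wanted_classes):
        super().__init__()
        self.wanted = set(wanted_classes)
        self.stack = []  # one flag per open element: is it (or an ancestor) wanted?
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in self.VOID:
            return  # void elements never get an end tag, so don't track them
        classes = set((dict(attrs).get("class") or "").split())
        inside = bool(self.stack and self.stack[-1])
        self.stack.append(inside or bool(classes & self.wanted))

    def handle_endtag(self, tag):
        if tag not in self.VOID and self.stack:
            self.stack.pop()

    def handle_data(self, data):
        if self.stack and self.stack[-1] and data.strip():
            self.parts.append(data.strip())


def extract_text(html: str, wanted_classes) -> str:
    parser = ClassFilteredText(wanted_classes)
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


page = (
    '<html><body><nav class="menu">Home</nav>'
    '<h1 class="post-title">RAG notes</h1>'
    '<div class="post-content"><p>Retrieval comes first.</p><br></div>'
    "<footer>Footer</footer></body></html>"
)
text = extract_text(page, ("post-title", "post-header", "post-content"))
```

Filtering this way keeps navigation menus and footers out of the vector store, which is why the tutorial restricts parsing to the post's own classes.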

It also offered useful packaging suggestions, helping users set up environments in which every dependency installs cleanly with pip.

Challenges identified

Cursor sometimes oversimplified certain configurations, making assumptions about parameter defaults that clashed with LangChain's latest updates. Notably, it accidentally overwrote crucial files with dummy values (for example, the OpenAI API key in the .env file), creating minor inconveniences that a developer had to fix manually.
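One simple safeguard against this kind of overwrite is to create configuration templates in exclusive mode and to check for leftover placeholder values before the app runs. The sketch below is hypothetical: the function names and the placeholder list are assumptions, and it parses only simple `KEY=value` lines rather than the full syntax that tools such as python-dotenv accept. The key detail is Python's `"x"` file mode, which creates the file and raises `FileExistsError` if one already exists.

```python
import os
import tempfile

# Hypothetical values that indicate a key was never filled in.
PLACEHOLDERS = {"", "your-api-key", "changeme", "xxx"}


def write_env_template(path: str, keys: list[str]) -> bool:
    """Write KEY=your-api-key lines only if no file exists yet; return True if written."""
    try:
        # "x" mode creates the file and raises FileExistsError instead of overwriting.
        with open(path, "x", encoding="utf-8") as f:
            for key in keys:
                f.write(f"{key}=your-api-key\n")
    except FileExistsError:
        return False
    return True


def placeholder_keys(path: str) -> list[str]:
    """Return keys from simple KEY=value lines whose values still look like placeholders."""
    flagged = []
    with open(path, encoding="utf-8") as f:
        for raw in f:
            line = raw.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, value = line.split("=", 1)
            if value.strip().strip("\"'") in PLACEHOLDERS:
                flagged.append(key.strip())
    return flagged


# Usage: an existing .env with a real-looking key is left untouched.
with tempfile.TemporaryDirectory() as demo_dir:
    env_path = os.path.join(demo_dir, ".env")
    with open(env_path, "w", encoding="utf-8") as f:
        f.write("OPENAI_API_KEY=key-supplied-by-the-developer\n")
    wrote_existing = write_env_template(env_path, ["OPENAI_API_KEY"])
    with open(env_path, encoding="utf-8") as f:
        existing_contents = f.read()

    new_path = os.path.join(demo_dir, "template.env")
    wrote_new = write_env_template(new_path, ["OPENAI_API_KEY"])
    flagged_new = placeholder_keys(new_path)
```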

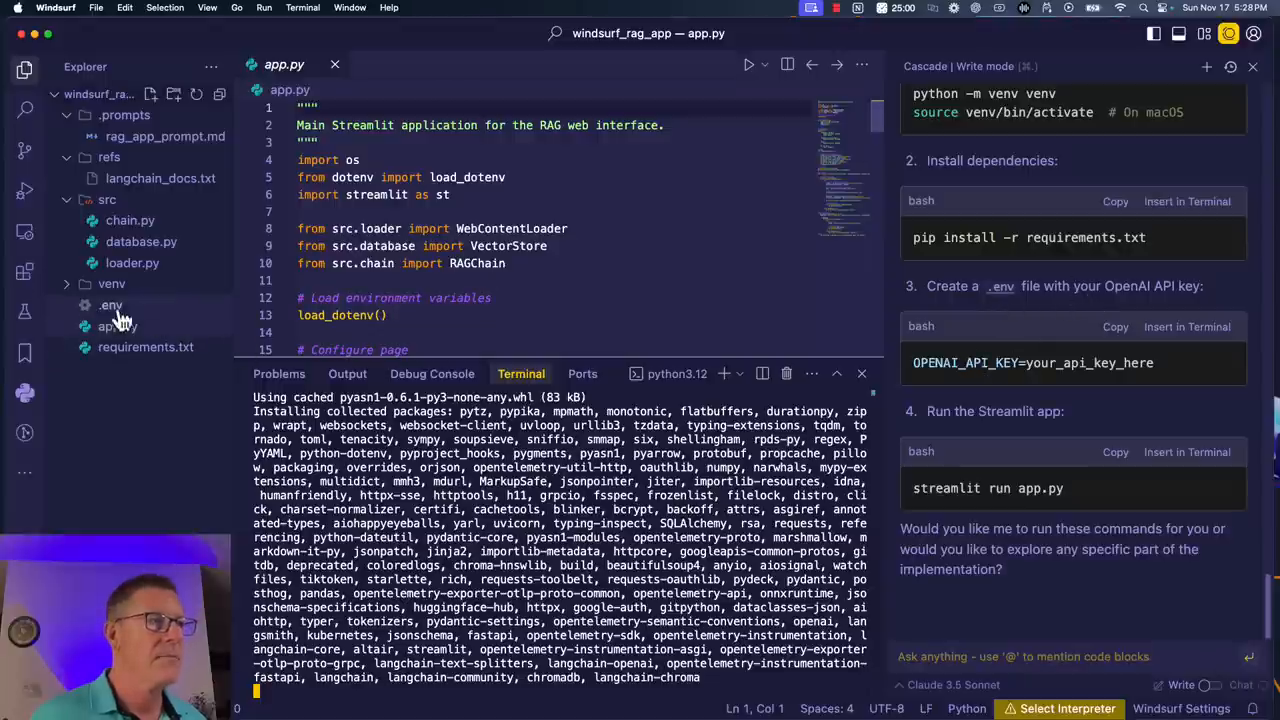

Windsurf: the new player in AI coding

Finally, Windsurf stepped up to tackle the challenge. Although newer than Cursor or Aider, it brought some distinctive features to the table. It began the same way as the others, loading the LangChain documentation and efficiently generating modular components.

Windsurf initializing the RAG app

Where Windsurf differed was in how it used that material: its documentation integration and its ability to put complex commands in context felt slightly smoother, especially when it generated concise summaries of the user-defined constraints.

Debugging approach

Windsurf required fewer manual screenshots than its competitors, suggesting better context retention and self-correction. Yet, like the others, it ran into the same ChromaDB persistence bugs, most likely a limitation of the underlying models' training data rather than of the tool itself.

One downside noted during testing was the lack of inline debugging UI features found in Cursor or Aider. Windsurf instead required users to copy errors and messages manually into its interface, making iteration less fluid.

Comparison: strengths and shortcomings

Strengths

- Aider: Best debugging capabilities and modular setups, with precise suggestions during fixes.

- Cursor: Fast and responsive code generation, with versatile tools for refactoring and dependency handling.

- Windsurf: Strong contextual analysis, fewer correction cycles, and well suited to concise modular generation tasks.

Debugging challenges across tools

Weaknesses

- Aider: Reliance on manual terminal commands made it slightly less convenient.

- Cursor: Prone to overwriting .env files or other sensitive configuration; struggles with newer API parameters.

- Windsurf: Lacks the inline debugging UI its competitors offer, so errors must be copied into its interface manually.

Final verdict and what we learned

None of the tools was perfect, but the progress of AI coding assistants is undeniable. Tasks that could take a developer weeks can be sped up significantly with these tools, provided users are prepared to make small iterative fixes. Choosing between the assistants comes down to preference (for example, hands-off debugging versus depth of contextual analysis).

Final comparison of the completed RAG apps

Each assistant succeeded in building a RAG app from modular components: a Streamlit UI, a document loader, and a vector store. No single tool stood out as "the best"; the clear winner is the adaptability that AI-assisted coding offers developers today.

Conclusion: crafting the future with AI-assisted coding

AI coding assistants like Aider, Cursor, and Windsurf no longer feel like novelties; they are becoming essential tools in software development pipelines. These experiments demonstrate their practical impact: faster app prototyping, real-time debugging, and modular code, all from minimal input.

If you're excited to explore more or share insights about coding with AI, consider joining the Skool Community to discuss, exchange ideas, and learn! Together, let’s code the future—with AI at the helm.