# Goodbye AI box: clustering MacBooks for LLMs using Thunderbolt and NAS

In this walkthrough, the content creator replaces a traditional AI hardware box that is "loud, big, and hot" with four MacBooks, a high-speed SSD-only NAS (Network Attached Storage), and a Thunderbolt bridge. The cluster runs LLMs (Large Language Models) with the open-source clustering tool EXO, and the result is a quiet, efficient, and scalable development setup.


[*This marks the beginning of swapping bulky hardware with a light, high-performance MacBook cluster!*](https://www.youtube.com/watch?v=uuRkRmM9XMc&t=0s)

## replacing the outdated "AI box"

The presenter starts with the drawbacks of the “AI box” previously used for LLM workflows: it takes up a lot of space, draws a lot of power, and generates considerable heat and noise. The alternative is a cluster of four lightweight MacBooks that share an SSD-only NAS. The aim is a smaller, quieter, and more energy-efficient setup that can still run large models.

## components of the new setup

At the heart of this experiment is an SSD-only NAS and a Thunderbolt bridge, paired with MacBooks featuring varied configurations. The essential components are discussed below.

### The NAS (network attached storage)

The core storage hardware in this ecosystem is a brand-new SSD-only NAS by TerraMaster. Unlike traditional DAS (Direct Attached Storage), which connects directly to a single machine, NAS is network-based and enables multiple devices to access shared storage simultaneously. This feature is critical for clustering multiple machines because:

1. **Reduced data redundancy**: Storing models centrally on the NAS eliminates the need to download bulky LLMs separately on each machine.

2. **Speed and silence**: Being SSD-based, the NAS is both exceptionally fast and practically silent.

3. **Scalability**: The TerraMaster NAS includes a 10 Gigabit NIC (Network Interface Card). While the setup uses only 2.5 Gbps due to network limitations, this still ensures swift data transfer speeds for clustering tasks.
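To put the link speeds in perspective, here is a rough back-of-the-envelope sketch of how long it takes to pull a model file from the NAS at 2.5 Gbps versus 10 Gbps. The 10 GB model size is a hypothetical figure chosen for illustration, and the calculation ignores protocol overhead and disk speed, so real transfers will be somewhat slower.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Ideal time in seconds to move `size_gb` gigabytes over a `link_gbps` link.

    `efficiency` (0 < efficiency <= 1) is an assumed fudge factor for
    protocol overhead; 1.0 means the full line rate is usable.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency in (0, 1]")
    size_gigabits = size_gb * 8  # 1 byte = 8 bits
    return size_gigabits / (link_gbps * efficiency)


if __name__ == "__main__":
    model_gb = 10.0  # hypothetical model size, not a figure from the video
    for gbps in (2.5, 10.0):
        print(f"{gbps} Gbps: about {transfer_seconds(model_gb, gbps):.0f} s")
```

Under these assumptions the same file takes four times longer at 2.5 Gbps than at 10 Gbps, but both are one-off load costs rather than something paid per generated token.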

The presenter also notes an exciting possibility: using the NAS as a media server in the future due to its capabilities.

### The Thunderbolt bridge

Moving from WiFi (used previously) to a wired Thunderbolt bridge is another significant improvement. The MacBooks connect to each other directly through their Thunderbolt ports, which gives a faster and more stable link than WiFi. Each MacBook is manually assigned an IP address so the machines can reach each other predictably.

To clarify:

- The main MacBook Pro acts as a hub, connecting with Thunderbolt cables to up to three other MacBooks.

- Manual IP configuration ensures stability and faster performance when transferring data or distributing LLM processing workloads across machines.
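The manual addressing described above can be planned ahead of time. The sketch below uses Python's standard `ipaddress` module to hand out one static address per machine from a private subnet. The subnet `10.0.0.0/24` and the host names are assumptions for illustration; the video does not state which addresses were used.

```python
import ipaddress


def plan_static_ips(hostnames, subnet="10.0.0.0/24"):
    """Assign consecutive usable addresses from `subnet` to each hostname."""
    network = ipaddress.ip_network(subnet)
    usable = iter(network.hosts())  # skips the network and broadcast addresses
    plan = {}
    for name in hostnames:
        try:
            plan[name] = str(next(usable))
        except StopIteration:
            raise ValueError(f"{subnet} is too small for {len(hostnames)} hosts") from None
    return plan


if __name__ == "__main__":
    # Hypothetical names for the four machines in the cluster.
    machines = ["macbook-pro", "air-m1", "air-m2", "air-m3"]
    netmask = ipaddress.ip_network("10.0.0.0/24").netmask
    for name, address in plan_static_ips(machines).items():
        print(f"{name}: {address} (netmask {netmask})")
```

Each address would then be entered by hand in the Thunderbolt Bridge network settings of the matching MacBook.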


[*Manually assigning IP addresses to ensure seamless Thunderbolt connections between MacBooks.*](https://www.youtube.com/watch?v=uuRkRmM9XMc&t=55s)

---

## open-source tool: "EXO" for clustering

Clustering the MacBooks to distribute heavy LLM tasks is accomplished using the **EXO** tool by Exo Labs. The presenter describes EXO as an efficient, user-friendly open-source framework designed for dividing massive workloads between multiple devices.

**Hardware stack for clustering**:

- **MacBook Pro 16**: Main driver with 64GB RAM.

- **MacBook Airs**: M1, M2, and M3 variants, each equipped with 8GB of RAM.

EXO's advantage lies not just in clustering but in how it allocates resources. A model that is too large for one machine's RAM is split across all systems according to the memory each MacBook has available, so even memory-limited devices like the 8GB MacBook Airs can take a share of the work.
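To illustrate the idea of splitting a model in proportion to each machine's memory, here is a minimal sketch. It is not EXO's actual code: the function name, the rounding scheme, and the 32-layer model are assumptions made for the example. Only the memory sizes (64GB and three times 8GB) come from the setup described here.

```python
def split_layers_by_memory(memory_gb, n_layers):
    """Give each node a contiguous share of layers proportional to its memory.

    Boundaries are computed from cumulative memory so the shares always
    add up to exactly `n_layers`.
    """
    total = sum(memory_gb)
    if total <= 0 or n_layers < 0:
        raise ValueError("need positive total memory and a non-negative layer count")
    counts = []
    cumulative = 0
    previous_boundary = 0
    for mem in memory_gb:
        cumulative += mem
        boundary = round(n_layers * cumulative / total)
        counts.append(boundary - previous_boundary)
        previous_boundary = boundary
    return counts


if __name__ == "__main__":
    # MacBook Pro (64GB) followed by three MacBook Airs (8GB each).
    nodes_gb = [64, 8, 8, 8]
    print(split_layers_by_memory(nodes_gb, n_layers=32))  # [23, 3, 3, 3]
```

With these numbers the MacBook Pro ends up holding most of the layers, and each Air holds only a few.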

Key Improvements:

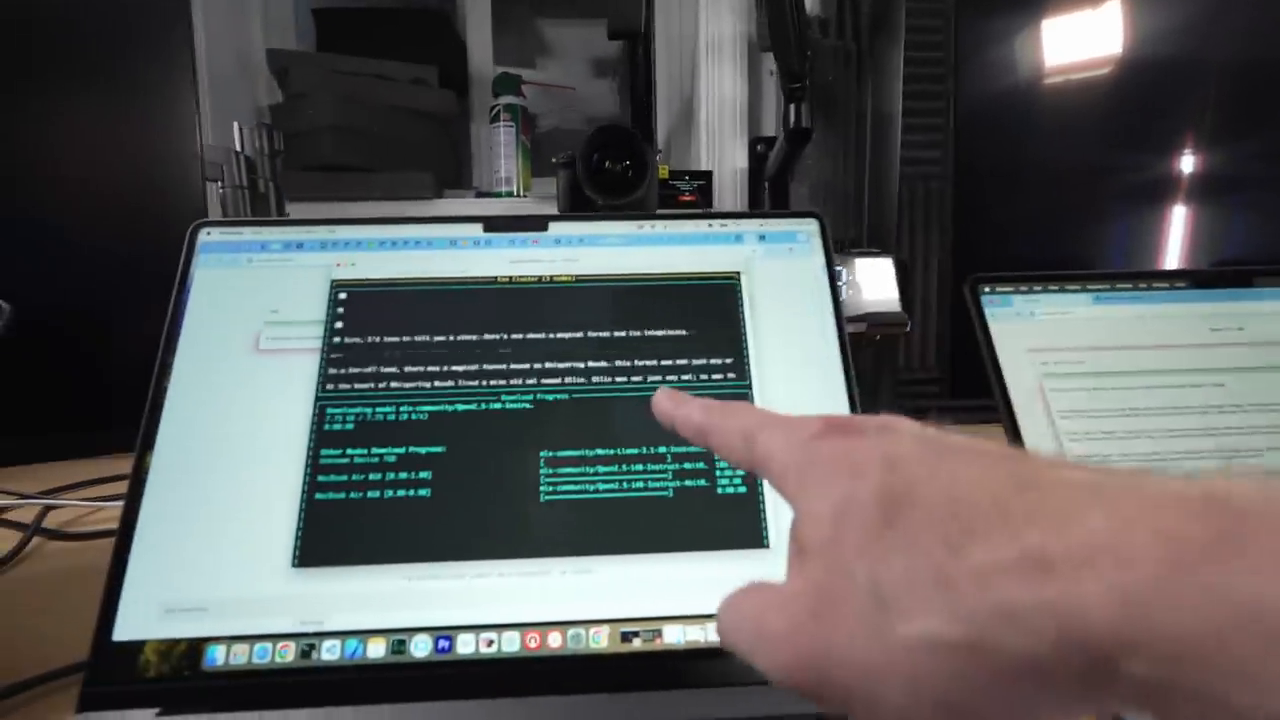

- In earlier experiments (with only three devices), models had to be downloaded locally on each computer. However, by integrating the shared NAS, redundancy is eliminated as all systems pull the models from a centralized location.

- Additionally, setting custom environment variables such as `HF_HOME` allows EXO to automatically reference NAS-stored models when initializing.

The process for NAS integration involves:

1. Mapping SMB folders from the NAS, with specific directories for models.

2. Setting `HF_HOME` as an environment variable using the folder paths (e.g., `/Volumes/AlexModels/HuggingFace/`).

3. Restarting the terminal post-configuration to allow EXO access to this shared storage path.
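The steps above set `HF_HOME` in the shell. As a hedged alternative for a script that launches tooling itself, the sketch below sets the variable from Python only when the SMB share is actually mounted. The helper name `configure_hf_home` is hypothetical; the path is the example from the steps above. A variable set this way affects only the current process and its children, and it should be set before any Hugging Face library is imported.

```python
import os
from pathlib import Path


def configure_hf_home(share_path, env=None):
    """Point HF_HOME at `share_path` if that directory exists.

    Returns True when the variable was set, False when the share is
    missing (for example, the NAS folder is not mounted).
    """
    if env is None:
        env = os.environ
    path = Path(share_path)
    if not path.is_dir():
        return False
    env["HF_HOME"] = str(path)
    return True


if __name__ == "__main__":
    if configure_hf_home("/Volumes/AlexModels/HuggingFace/"):
        print("HF_HOME ->", os.environ["HF_HOME"])
    else:
        print("NAS share not mounted; leaving HF_HOME unchanged")
```

Checking for the mount first avoids silently falling back to a nonexistent path when the NAS is offline.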


[*Configuring EXO to seamlessly pull large LLM models directly from the NAS.*](https://www.youtube.com/watch?v=uuRkRmM9XMc&t=200s)

---

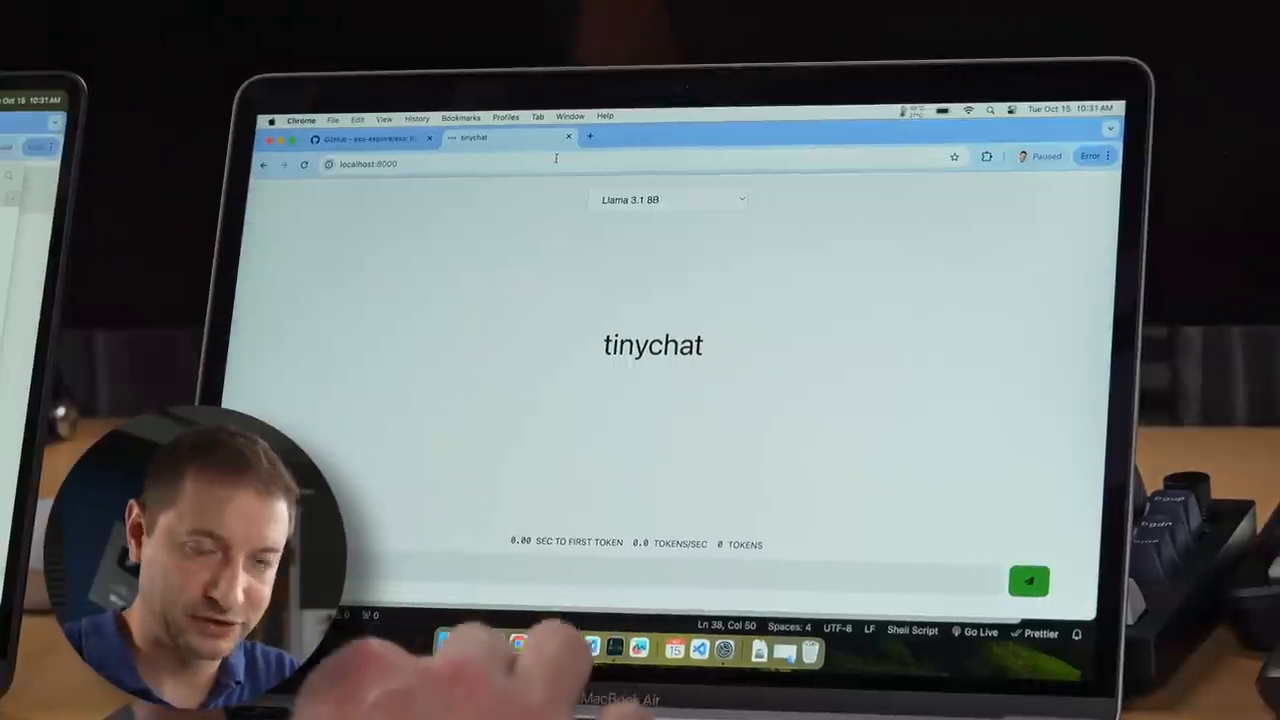

## testing: LLM performance across nodes

Once the setup is complete, the presenter tests the clustering efficiency by working directly with models of varying scales. The steps include:

1. **Initializing a single MacBook**: The initial EXO setup runs on only one node. The system is tested with a small model, the 1-billion-parameter variant of Llama 3.2, which runs locally on this single machine.

2. **Expanding the cluster**: Additional MacBooks are connected and detected by EXO, extending the cluster to three, and eventually four, nodes.

3. **Handling large models**: Running resource-intensive models such as Qwen 2.5 with 14 billion parameters demonstrates how EXO distributes the load among machines. Although the MacBook Airs have limited memory, the workload remains manageable because each node holds only part of the model and the cluster's memory is pooled.

Observations:

- Smaller models (e.g., 1B parameters) are processed faster (up to 40 tokens/second).

- However, processing larger models requires distributing the workload across all four systems to overcome memory limitations in individual nodes.

- EXO intelligently uses the MacBook Pro’s 64GB RAM for heavier lifting, ensuring MacBook Airs don’t exceed their 8GB memory limits.
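A rough weight-size estimate shows why the larger model cannot run on a single 8GB MacBook Air. The sketch below multiplies the parameter count by the bytes per parameter. The precision is an assumption, since the video summary does not state which quantization was used, and the estimate covers weights only, ignoring the KV cache, activations, and the operating system's own memory use.

```python
def weights_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_param / 8


def fits(params_billions: float, bits_per_param: int, memory_gb: float) -> bool:
    """True if the weights alone fit in `memory_gb` (an optimistic check)."""
    return weights_gb(params_billions, bits_per_param) <= memory_gb


if __name__ == "__main__":
    cluster_gb = 64 + 3 * 8  # MacBook Pro plus three MacBook Airs
    for bits in (16, 4):  # assumed precisions, for illustration only
        size = weights_gb(14, bits)
        print(f"14B at {bits}-bit: {size:.0f} GB, "
              f"fits one 8GB Air: {fits(14, bits, 8)}, "
              f"fits {cluster_gb}GB cluster: {fits(14, bits, cluster_gb)}")
```

At 16-bit precision the 14B model needs about 28 GB for weights alone, well beyond one Air. Even at 4-bit (about 7 GB) the weights would leave almost no headroom on an 8GB machine.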


[*EXO seamlessly detects and integrates all four MacBooks into a single functioning cluster.*](https://www.youtube.com/watch?v=uuRkRmM9XMc&t=480s)

---

## additional setup challenges

Setting up a multi-device environment inevitably introduces challenges. One issue encountered was a permissions error related to accessing the NAS folder from four Macs simultaneously. The problem was quickly resolved, after which even the most resource-intensive model tested, Qwen 14B, ran across the cluster.
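The video summary does not say how the permissions error was fixed. As a diagnostic sketch only, the helper below (a hypothetical name, `check_share_access`) reports whether the current user can see, read, and write a mounted folder. Running it on each Mac can help narrow down which node lacks access.

```python
import os
from pathlib import Path


def check_share_access(path):
    """Report basic access to a mounted folder for the current user."""
    folder = Path(path)
    return {
        "exists": folder.is_dir(),
        "readable": os.access(folder, os.R_OK),
        "writable": os.access(folder, os.W_OK),
    }


if __name__ == "__main__":
    # Example path taken from the HF_HOME setup described earlier.
    print(check_share_access("/Volumes/AlexModels/HuggingFace/"))
```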

### Interesting benchmarks:

- The throughput (tokens/second) varies dramatically based on the machine:

  - M1 MacBook Air: 23 tokens/sec.

  - M2 MacBook Air: 18 tokens/sec.

  - M3 MacBook Air: 30 tokens/sec.

  - M2 Max MacBook Pro: 48 tokens/sec.
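To make the spread between machines easier to compare, this small sketch expresses each reported figure as a fraction of the fastest machine. The numbers are the ones listed above; the function name is illustrative.

```python
# Tokens per second as reported in the benchmarks above.
throughput = {
    "M1 MacBook Air": 23,
    "M2 MacBook Air": 18,
    "M3 MacBook Air": 30,
    "M2 Max MacBook Pro": 48,
}


def relative_to_fastest(tokens_per_sec):
    """Express each machine's throughput as a fraction of the fastest one."""
    fastest = max(tokens_per_sec.values())
    return {name: tps / fastest for name, tps in tokens_per_sec.items()}


if __name__ == "__main__":
    for name, share in relative_to_fastest(throughput).items():
        print(f"{name}: {share:.0%} of the fastest machine")
```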

These differences show why it matters that EXO accounts for the capabilities of each device when distributing work across the cluster.

---

## final thoughts: a compact, scalable solution

This experiment shows that clustering MacBooks with a NAS and the EXO tool is a viable, efficient replacement for bulky AI hardware setups. The NAS centralizes model storage and removes the need to keep a copy of each model on every machine, while the Thunderbolt bridge provides fast, stable communication between machines.

### Takeaways:

- The **SSD-only NAS**, though not the cheapest, is worth the investment for those requiring quiet, fast, and centralized storage solutions.

- EXO simplifies the task of running large-scale LLMs on clusters, especially when combined with good hardware and shared storage.

Overall, the new setup opens exciting doors for developers who may lack access to GPU-heavy servers but want to experiment with AI workflows using lightweight laptops.

---

Looking for more experiments? Check out the creator's previous experiments with AI setups and further exploration of EXO!


[*Be sure to check out EXO's work on distributed machine learning!*](https://www.youtube.com/watch?v=uuRkRmM9XMc&t=720s)