Apache Spark: A revolutionary tool for big data analytics and machine learning

In a world where data is the new oil, processing and understanding vast amounts of information is a cornerstone of technological advancement. Apache Spark has emerged as one of the most robust and powerful tools to handle big data efficiently. This open-source data analytics engine has revolutionized the way organizations process and analyze massive streams of data. Let’s delve deeper into the concepts of Apache Spark, explore its history, compare it with other technologies, and understand how it handles complex data tasks like a pro.

What is Apache Spark?

Apache Spark is a cutting-edge data analytics engine.

Apache Spark is an open-source data analytics engine designed to process massive volumes of data from multiple sources, either in batch mode or in near real time. Its ability to juggle such complex computations has been likened to “an octopus juggling chainsaws.” Spark specializes in distributed data processing and gets much of its speed by keeping intermediate data in memory. For some workloads, this can make it up to 100 times faster than disk-based systems such as Hadoop MapReduce.

The origins of Apache Spark

Apache Spark was created at UC Berkeley's AMP Lab.

Apache Spark originated in 2009 as a research project led by Matei Zaharia at UC Berkeley's AMP Lab. At the time, the amount of data generated on the internet was growing explosively, moving from megabytes to petabytes. Analyzing that volume of data on a single machine had become nearly impossible. This growing need sparked the development of Apache Spark, giving researchers and enterprises a tool to process massive datasets across distributed systems.

Apache Spark vs Hadoop: The battle of big data tools

Apache Spark introduced in-memory data processing compared to Hadoop's disk-based workflows.

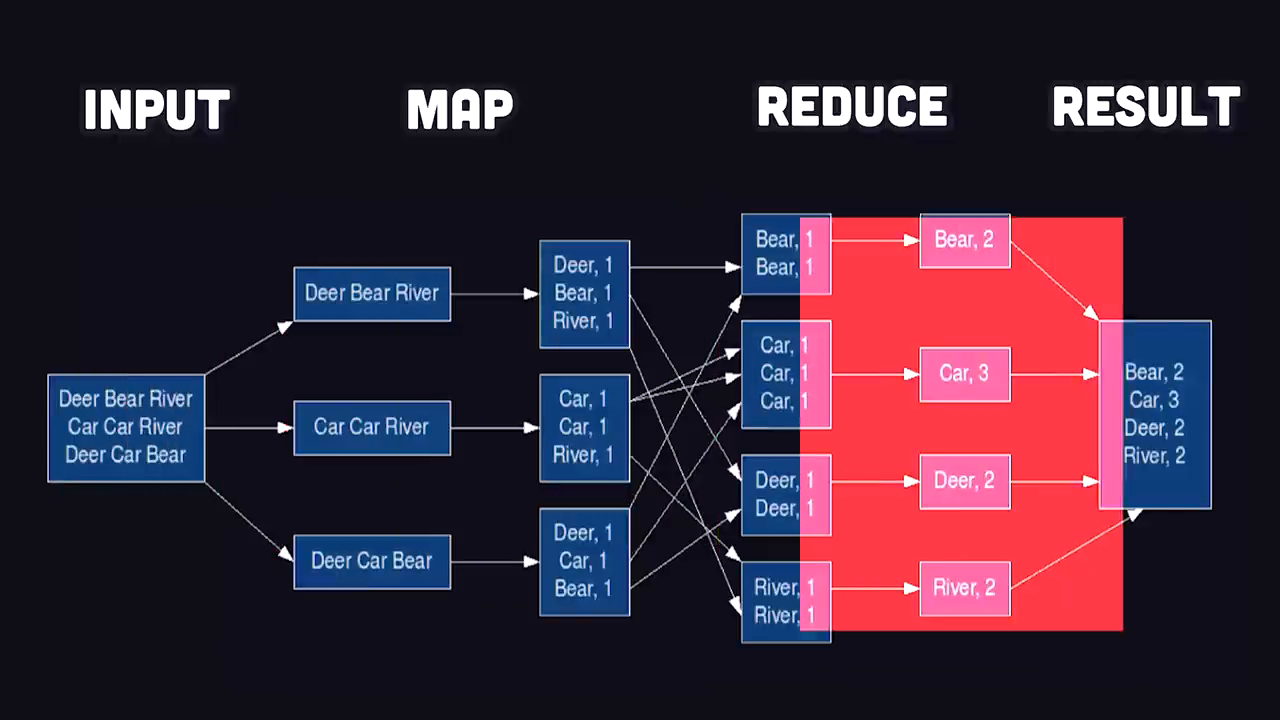

Before Apache Spark, Hadoop's MapReduce was the dominant player in big data analytics. MapReduce uses a programming model in which data is mapped into key-value pairs, shuffled and sorted by key, and then reduced into final results. While effective at handling distributed datasets, MapReduce hit bottlenecks because of its reliance on disk: each job writes its output to disk, so multi-step and iterative workloads spend much of their time on input/output (I/O) operations.
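To make the map, shuffle/sort, and reduce phases concrete, here is a single-process Python sketch of the classic word-count job. It only simulates the programming model: a real MapReduce job runs each phase across many machines and persists intermediate data to disk, which this sketch does not do. The function names are illustrative and are not part of any Hadoop API.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Map: emit a (key, value) pair of (word, 1) for every word in the line
    return [(word, 1) for word in line.lower().split()]


def shuffle_and_sort(pairs):
    # Shuffle: group all values that share a key; Sort: order the groups by key
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reduce_phase(key, values):
    # Reduce: collapse every value for one key into a single result
    return key, sum(values)


def word_count(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return [reduce_phase(key, values) for key, values in shuffle_and_sort(mapped)]


if __name__ == "__main__":
    docs = ["spark is fast", "hadoop is reliable", "spark is popular"]
    print(word_count(docs))
    # [('fast', 1), ('hadoop', 1), ('is', 3), ('popular', 1), ('reliable', 1), ('spark', 2)]
```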

Apache Spark changed the game by introducing in-memory data processing. Instead of writing intermediate results to disk and reading them back, Spark can keep them in RAM between steps, which significantly accelerates multi-step computations. Removing this I/O bottleneck is what lets Spark run up to 100 times faster than Hadoop MapReduce on certain in-memory workloads.

Real-world use cases of Apache Spark

Apache Spark powers data analytics for Fortune 500 companies, NASA, and more.

Apache Spark powers some of the world's largest organizations across various industries:

- Amazon: Spark helps analyze e-commerce data, transforming the customer experience with targeted recommendations and predictions.

- NASA’s Jet Propulsion Lab: It utilizes Spark to process massive amounts of space data, exploring the depths of the universe.

- 80% of Fortune 500 companies: Spark has become the go-to solution for enterprises handling complex big data pipelines and analytics needs.

Despite its reputation as a distributed computing tool, Spark can also run locally, making it accessible for small-scale projects and individual developers.

How Apache Spark works

Getting started with Apache Spark

Apache Spark allows local and distributed processing via easy APIs.

Apache Spark is primarily written in Scala and runs on the Java Virtual Machine (JVM). Its APIs support several languages, including Scala, Java, Python (via PySpark), and SQL, making Spark accessible to a broad developer base. To get started, you install Spark (Python users can install the pyspark package) and initialize a session.

Solving real-world problems with Spark

Spark DataFrame API enables intuitive real-world data filtering.

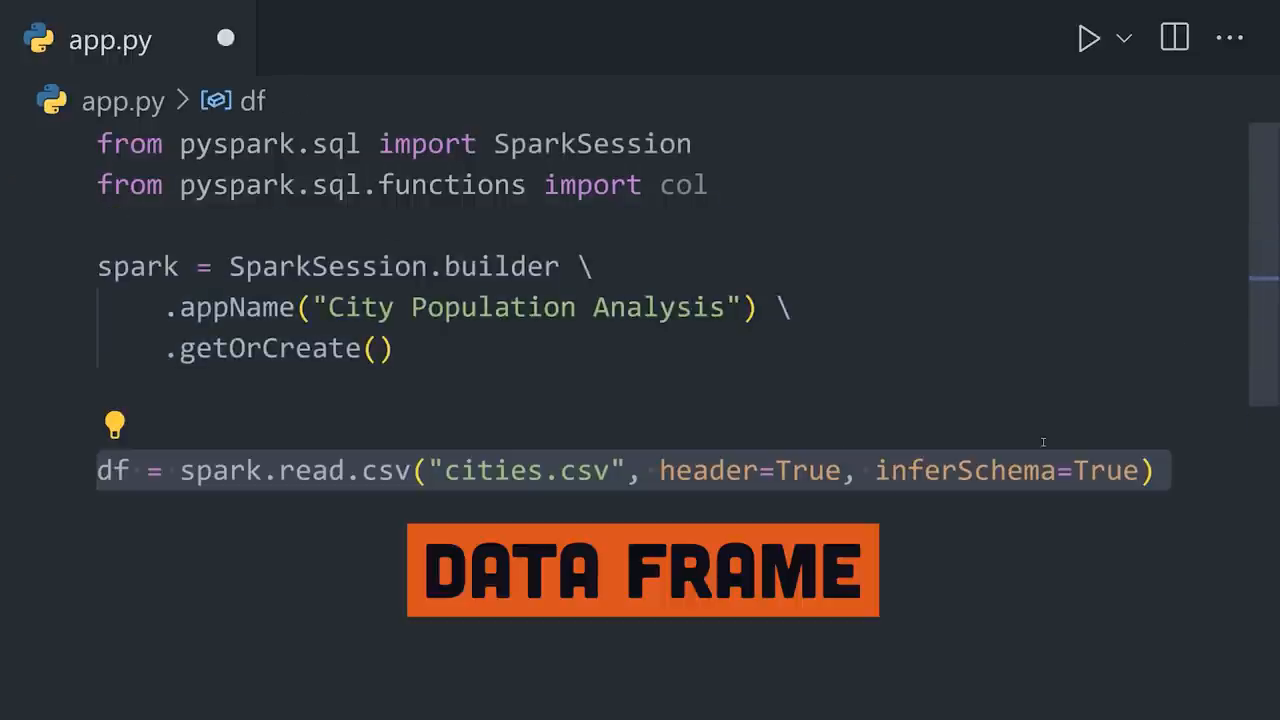

Imagine you have a CSV file with data on cities, including population, latitude, and longitude. Your task is to find the most populous city located between the tropics. Here's how Spark handles this step-by-step:

- Load the data into memory: Spark reads the CSV file into a DataFrame, a table of rows and named columns that is split into partitions so it can be processed in memory across a cluster.

- Filter the data: Keep only cities whose latitude lies between the Tropic of Capricorn (about 23.44° S) and the Tropic of Cancer (about 23.44° N), using a DataFrame transformation such as filter.

- Order and select results: Sort the filtered results by population and retrieve the city with the highest population. Spark makes this entire process seamless through method chaining with its DataFrame API.

In this example, Spark identifies Mexico City as the city with the largest tropical population.

Spark with SQL and distributed clusters

Spark integrates with SQL databases and supports clustered scaling.

For those who work in SQL, Spark SQL lets you query DataFrames with standard SQL statements, and Spark can also read tables from external databases over JDBC, which makes it approachable for database engineers. Additionally, when datasets outgrow a single machine, Spark's built-in cluster manager, or resource managers such as Hadoop YARN and container orchestration tools like Kubernetes, can scale workloads horizontally across many machines, achieving distributed processing at its finest.

Apache Spark for machine learning at scale

Spark's MLlib simplifies large-scale machine learning.

Apache Spark also shines in machine learning through its library MLlib. Because data is distributed across a cluster, MLlib lets you build machine learning pipelines that scale with your data. For instance:

- Vector assembler: MLlib's VectorAssembler combines multiple feature columns into a single vector column, the input format its algorithms expect.

- Data splitting: Split a DataFrame into training and testing sets (for example with randomSplit) to evaluate machine learning models.

- Algorithm selection: MLlib supports algorithms for classification, regression, clustering, and more.

Distributing training this way lets models learn from datasets far larger than a single machine could hold, building on Spark's large-scale data handling capabilities.

Education and tools to get started with Spark

Skills in programming and analytics can unlock Spark's full potential.

While Spark is a robust tool, harnessing its full potential requires a strong foundation in programming, data analysis, and problem-solving strategies. Platforms such as Brilliant.org can help individuals build these critical skills using immersive lessons and hands-on exercises. Investing time to improve logical thinking and programming habits can significantly enhance your proficiency with tools like Apache Spark.

Conclusion

Apache Spark unlocks the potential of scalable big data analytics.

Apache Spark continues to transform big data analytics with its in-memory computation, scalability, and integration with machine learning technologies. It has become a cornerstone in fields ranging from academia and research to industrial enterprises. Whether you’re analyzing e-commerce trends, processing deep space data, or training ML models at scale, Spark delivers fast, scalable performance.

As we stand on the shoulders of revolutionary tools like Apache Spark, it is essential to keep learning and developing our skills to adapt to the ever-changing landscape of technology. So, why not take the first step into the world of Spark and big data today?