Have You Been Using Reasoning Models Correctly?

OpenAI has released new documentation called Reasoning Best Practices, which explains how to use reasoning models effectively. It is written for OpenAI's own models, but much of its advice also applies to other reasoning models such as Gemini and DeepSeek. The documentation outlines the key differences between standard (non-reasoning) models and reasoning models and explains how to prompt reasoning models for the best results.

Introduction to Reasoning Models

Introduction to the correct usage of reasoning models, as explained in the video

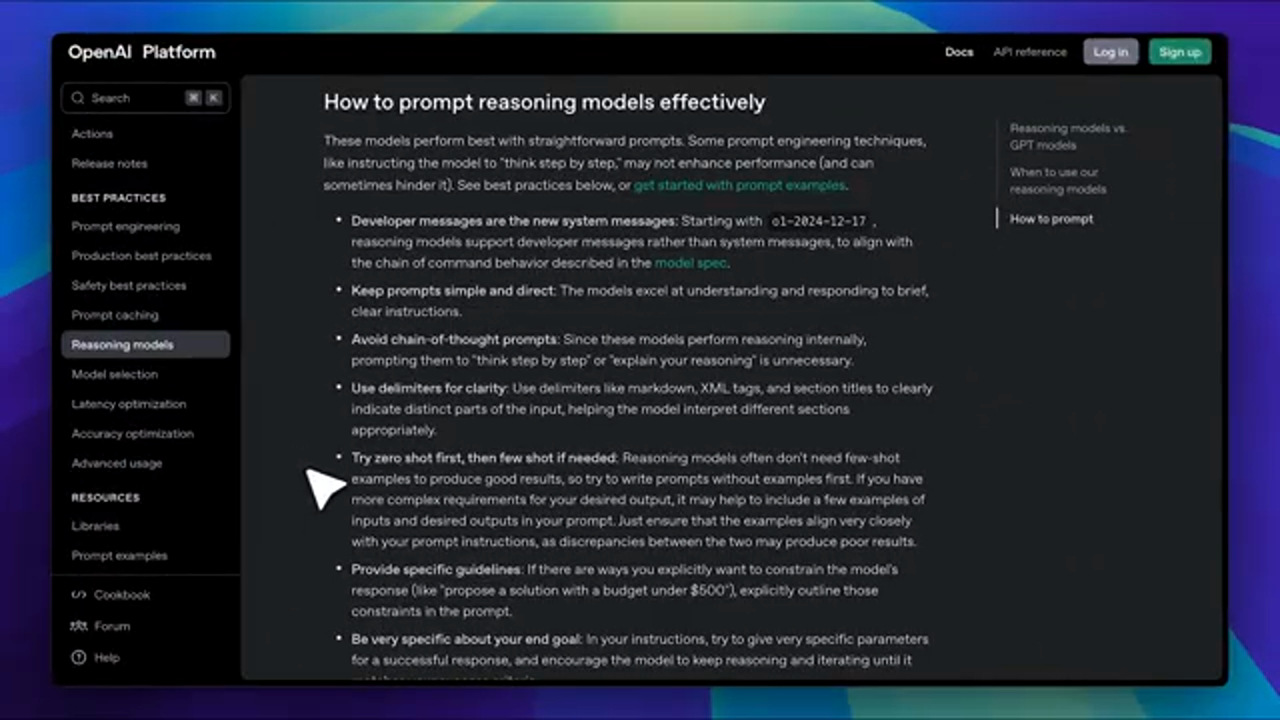

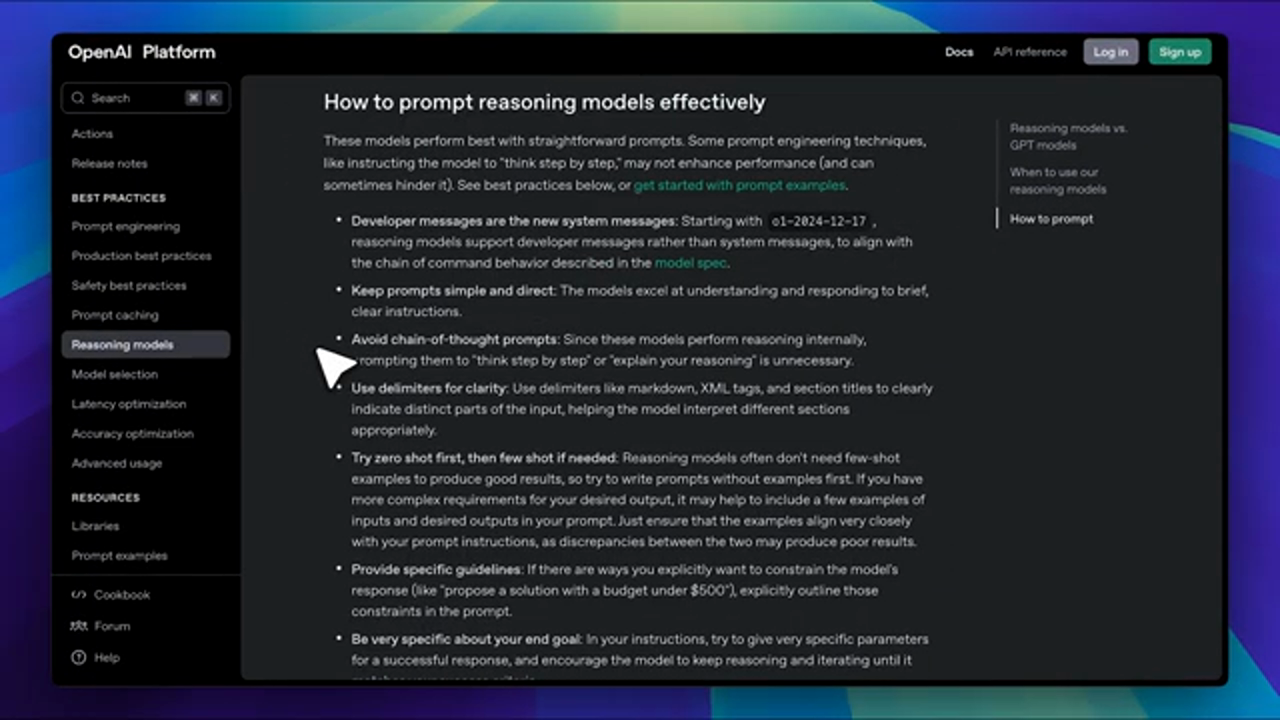

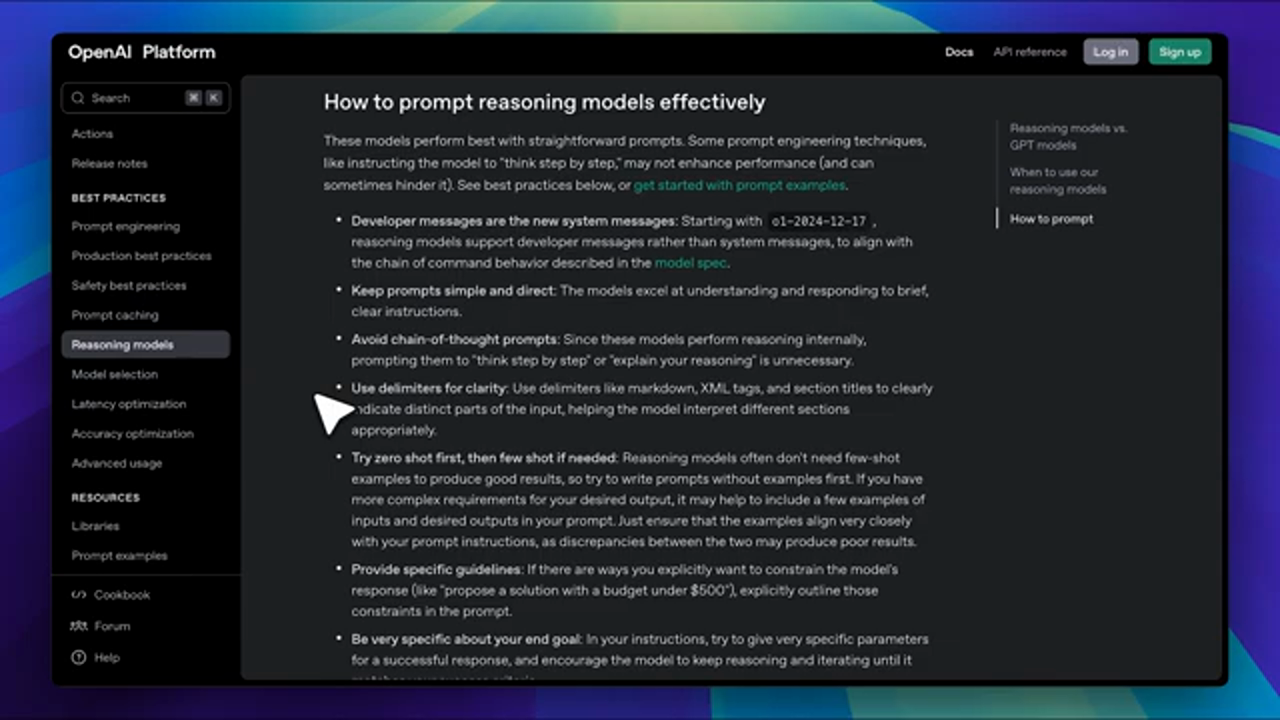

Simplifying Prompts for Reasoning Models

The importance of simplifying prompts for better results from reasoning models

The first tip from OpenAI is to keep the prompt simple and to the point: no extra fluff, no unnecessary words, just clear, direct instructions. This might seem obvious, but many people still overcomplicate their prompts.

Avoiding "Chain of Thought" Prompts

Why "Chain of Thought" prompts are unnecessary for reasoning models

Next, OpenAI advises against adding "Chain of Thought" prompts when using reasoning models. These are instructions that ask the model to think step by step or to explain its reasoning. Because reasoning models already reason internally, there's no need to ask for it.
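
One practical way to apply this tip is to check prompts for leftover chain-of-thought phrasing before sending them to a reasoning model. The sketch below is a minimal illustration, assuming prompts are plain strings; the phrase list and the `find_cot_phrases` helper are hypothetical and are not part of any OpenAI tooling.

```python
import re

# Hypothetical list of chain-of-thought style instructions that reasoning
# models do not need, since they already reason internally.
COT_PATTERNS = [
    r"think step[- ]by[- ]step",
    r"explain your reasoning",
    r"show your (work|reasoning)",
    r"let'?s think",
]


def find_cot_phrases(prompt: str) -> list[str]:
    """Return every chain-of-thought phrase found in the prompt (case-insensitive)."""
    found = []
    for pattern in COT_PATTERNS:
        for match in re.finditer(pattern, prompt, flags=re.IGNORECASE):
            found.append(match.group(0))
    return found


prompt = "Summarize this contract. Think step by step and explain your reasoning."
print(find_cot_phrases(prompt))  # ['Think step by step', 'explain your reasoning']
```

An empty result means the prompt is already free of these instructions; anything returned is a candidate for deletion.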

Using Delimiters for Better Results

How using delimiters can help reasoning models process information more effectively

Another important tip is to use delimiters, which are separators that mark off the distinct parts of your prompt. They can be markdown, XML tags, or section titles. For example, you could put your context at the top, followed by any examples, and then your instructions.
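
The context, examples, instructions layout can be assembled programmatically. This is a minimal sketch, assuming prompts are built as plain strings; the tag names `<context>`, `<examples>`, `<example>`, and `<instructions>` and the `build_prompt` helper are illustrative choices, not names prescribed by OpenAI's guide.

```python
def build_prompt(context: str, examples: list[str], instructions: str) -> str:
    """Assemble context, then examples, then instructions, each wrapped in XML-style tags."""
    sections = [f"<context>\n{context}\n</context>"]
    if examples:
        # Wrap each example separately so the model can tell where one ends.
        wrapped = "\n".join(f"<example>\n{ex}\n</example>" for ex in examples)
        sections.append(f"<examples>\n{wrapped}\n</examples>")
    sections.append(f"<instructions>\n{instructions}\n</instructions>")
    # A blank line between sections keeps the boundaries easy to see.
    return "\n\n".join(sections)


print(build_prompt(
    context="Our company sells refurbished laptops to small businesses.",
    examples=["Subject: Your order has shipped"],
    instructions="Write a subject line for an email announcing a 10% discount.",
))
```

Markdown headings or plain section titles would work just as well; the point is that each part of the prompt has a clear, consistent boundary.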

Zero-Shot vs. Few-Shot Prompting

Understanding when to use zero-shot and few-shot prompting with reasoning models

Next up is an interesting tip: try zero-shot first, then few-shot if needed. With reasoning models, you should first try your prompt without any examples, since these models are generally good at working out what kind of output you need. If the results still miss the mark, add a few examples, making sure they align closely with your instructions.
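
The "zero-shot first, few-shot as a fallback" workflow can be sketched as a small function. This is a minimal illustration under stated assumptions: `call_model` stands in for whatever client you use, `is_acceptable` is your own check on the output, and `make_prompt`, `zero_then_few_shot`, and `fake_model` are hypothetical helpers, with `fake_model` replacing a real API call so the example runs offline.

```python
from typing import Callable, Optional


def make_prompt(task: str, examples: Optional[list[tuple[str, str]]] = None) -> str:
    """Return the task alone (zero-shot) or the task followed by input/output pairs (few-shot)."""
    lines = [task]
    if examples:
        lines += ["", "Examples:"]
        for example_input, example_output in examples:
            lines.append(f"Input: {example_input}\nOutput: {example_output}")
    return "\n".join(lines)


def zero_then_few_shot(
    task: str,
    examples: list[tuple[str, str]],
    call_model: Callable[[str], str],
    is_acceptable: Callable[[str], bool],
) -> tuple[str, str]:
    """Try a zero-shot prompt first; add examples only if the answer fails the check."""
    answer = call_model(make_prompt(task))
    if is_acceptable(answer):
        return answer, "zero-shot"
    return call_model(make_prompt(task, examples)), "few-shot"


# Hypothetical stand-in for a real model call: it only follows the
# one-word label format once it has seen examples.
def fake_model(prompt: str) -> str:
    return "POSITIVE" if "Examples:" in prompt else "The sentiment is positive."


result = zero_then_few_shot(
    "Classify the sentiment of: 'Great battery life.' Answer with one word.",
    [("The screen cracked on day one.", "NEGATIVE"), ("Fast shipping, works well.", "POSITIVE")],
    fake_model,
    lambda answer: answer in {"POSITIVE", "NEGATIVE"},
)
print(result)  # ('POSITIVE', 'few-shot')
```

With a real reasoning model, the zero-shot attempt will often pass the check on its own, which is exactly why it is worth trying first.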

Being Specific with Conditions and Goals

The importance of being specific with conditions and goals when prompting reasoning models

The next two points are about being specific. First, if you want to constrain the response, state the constraint explicitly: for instance, if you need a solution that stays under a $500 budget, say so clearly in the prompt. Second, be specific about the goal of your prompt, so the model knows what a successful response looks like.
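
One way to make constraints and goals hard to miss is to give each its own labeled line. The sketch below assumes a plain-text prompt; the `build_constrained_prompt` helper and the "Constraints:" and "Goal:" labels are hypothetical conventions for illustration, not a format required by OpenAI.

```python
def build_constrained_prompt(task: str, constraints: list[str], goal: str) -> str:
    """Spell out each hard constraint and the success criterion on separate, explicit lines."""
    lines = [task, "", "Constraints:"]
    lines += [f"- {constraint}" for constraint in constraints]
    lines += ["", f"Goal: {goal}"]
    return "\n".join(lines)


prompt = build_constrained_prompt(
    "Propose a home-office setup.",
    ["Total cost must be under $500.", "Only include items sold new, not used."],
    "A list of items with prices whose total is under $500, ready to order.",
)
print(prompt)
```

Listing the budget as a constraint and restating it in the goal tells the model both what it must not exceed and what a finished answer should contain.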

Revisiting Developer Messages

Understanding the role of developer messages in guiding reasoning models

Before moving on to the last point, let's revisit the point that opens OpenAI's list: developer messages are replacing system messages. Like system messages, they are simply a way of guiding the model's behavior. Starting with the o1-2024-12-17 model, OpenAI's reasoning models take these instructions through developer messages, so in an API request, guidance you would previously have sent with the "system" role is now sent with the "developer" role.
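
Here is what that change looks like in a request body. This is a minimal sketch, assuming OpenAI's Chat Completions request format and the model name "o1"; the `build_request` helper is hypothetical, and the code only builds the JSON body rather than sending it (you would pass it to your HTTP client or the official SDK).

```python
import json


def build_request(instructions: str, user_input: str, model: str = "o1") -> dict:
    """Build a Chat Completions request body that uses the "developer" role."""
    return {
        "model": model,
        "messages": [
            # Guidance that older requests would have sent with the "system" role.
            {"role": "developer", "content": instructions},
            {"role": "user", "content": user_input},
        ],
    }


body = build_request(
    "You are a concise assistant. Answer in plain text.",
    "What is a bill of materials?",
)
print(json.dumps(body, indent=2))
```

The only difference from an older request is the role name on the first message; the rest of the body is unchanged.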

Example Prompt for Reasoning Models

An example of a well-structured prompt for reasoning models

Before we move on to the rest of the documentation OpenAI released, here is an example prompt that works really well with reasoning models. Credit goes to Alvaro Cintas, whom I found on x.com. The prompt is structured into separate sections: context, example, and instruction.

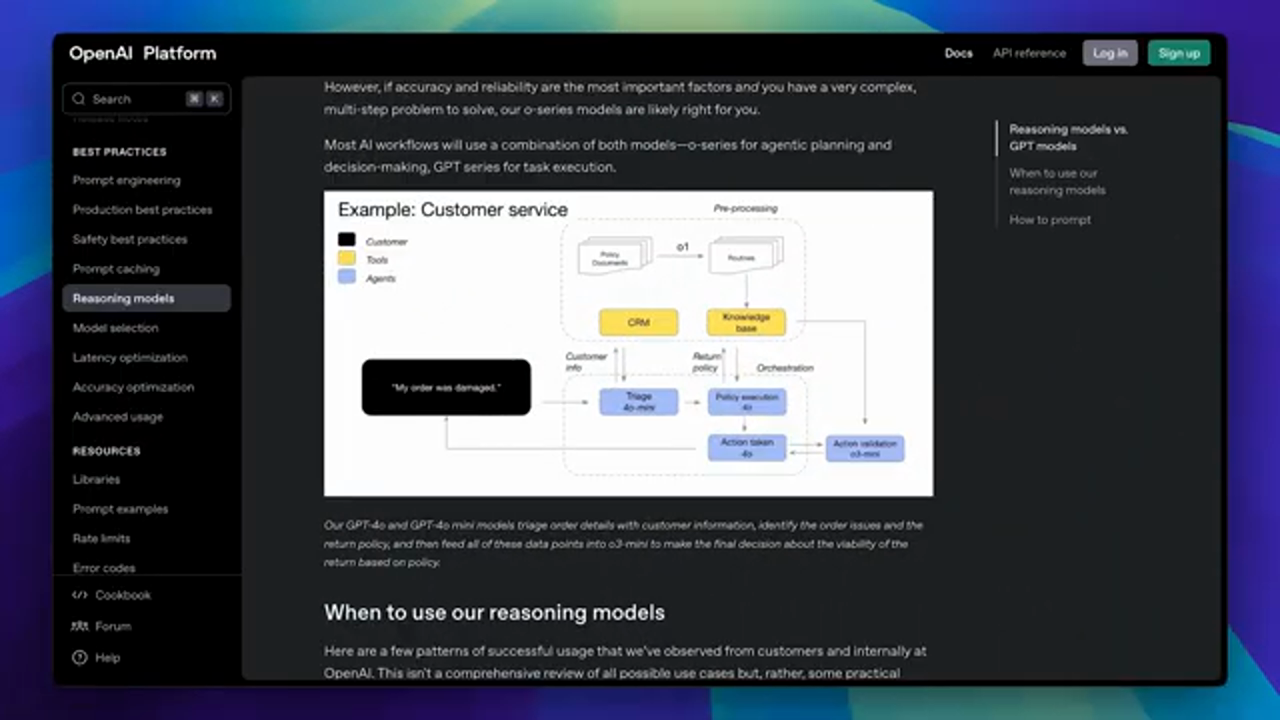

Reasoning vs. Non-Reasoning Models

Understanding the difference between reasoning and non-reasoning models

OpenAI also provides a customer service example to clarify the difference between its reasoning models and its non-reasoning GPT models. The reasoning models are dubbed "planners," while the non-reasoning models are called "workhorses" because of their lower latency and greater cost efficiency.

Navigating Ambiguous Tasks

How reasoning models navigate ambiguous tasks

OpenAI also highlights when to actually use reasoning models. The first case is navigating ambiguous tasks: when the meaning of your prompt is unclear, reasoning models will often ask clarifying questions instead of making assumptions, so they understand your intent before proceeding.

Multi-Step Agentic Planning

Understanding multi-step agentic planning with reasoning models

Next is multi-step agentic planning. Thanks to their step-by-step reasoning, these models can lay out a complete plan from start to finish, which is why they're called "planners." In an agentic workflow, the reasoning model can then delegate each step of that plan to a different model, choosing for each step whether high intelligence or low latency matters more.

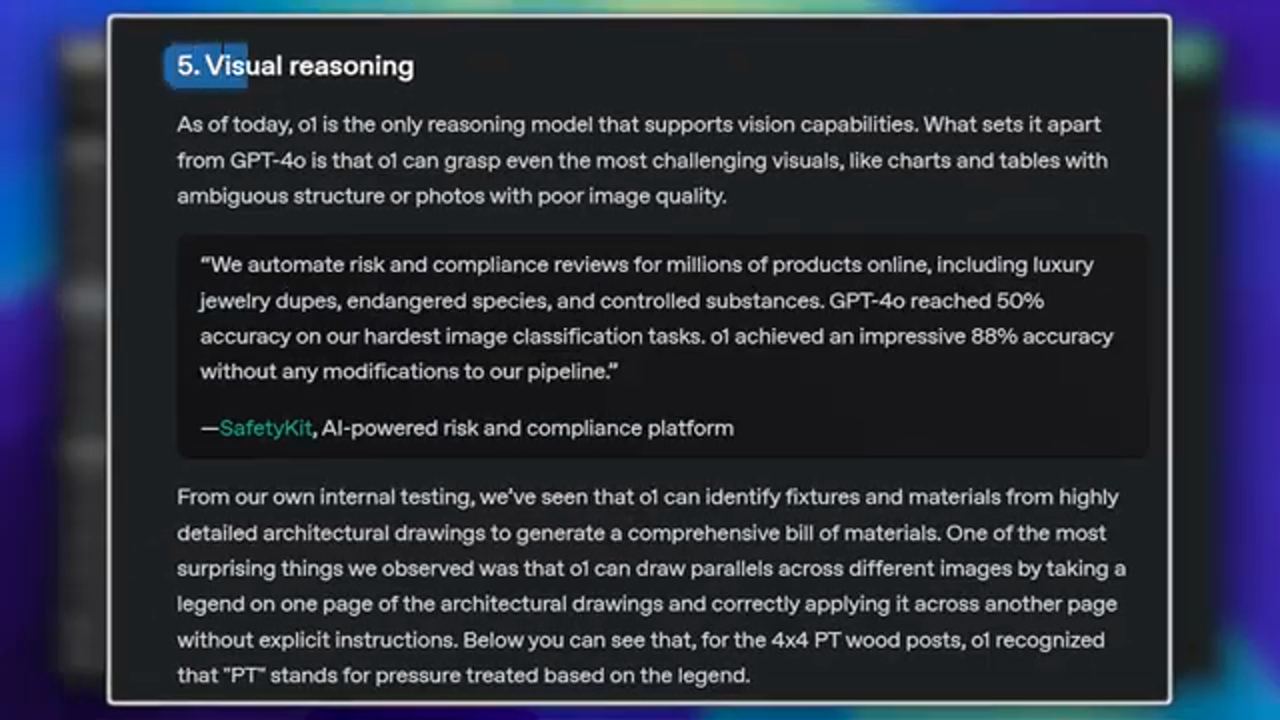
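
The planner/workhorse split can be sketched as a simple routing table. This is a minimal illustration, not OpenAI's implementation: the `Step` class, the "intelligence"/"latency" labels, and the model names "o1" and "gpt-4o-mini" are assumptions, and the plan is hard-coded here where a real agent would get it from the reasoning model.

```python
from dataclasses import dataclass


@dataclass
class Step:
    description: str
    needs: str  # hypothetical label: "intelligence" or "latency"


# Hypothetical mapping from a step's main requirement to a model name.
MODEL_FOR_NEED = {
    "intelligence": "o1",      # reasoning model: the "planner"
    "latency": "gpt-4o-mini",  # non-reasoning model: a "workhorse"
}


def assign_models(plan: list[Step]) -> list[tuple[str, str]]:
    """Pair each planned step with the model that should execute it."""
    return [(step.description, MODEL_FOR_NEED[step.needs]) for step in plan]


# In practice this plan would be produced by the reasoning model itself.
plan = [
    Step("Look up the customer's order status", "latency"),
    Step("Decide whether the refund policy applies", "intelligence"),
    Step("Draft a short reply to the customer", "latency"),
]
for description, model in assign_models(plan):
    print(f"{model:12} <- {description}")
```

Only the judgment-heavy step goes to the slower reasoning model; the routine lookups and drafting go to the faster, cheaper one.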

Visual Reasoning Capabilities

The visual reasoning capabilities of reasoning models

One of the most fascinating use cases for these models is visual reasoning. Because o1 combines vision and reasoning capabilities, it can understand highly detailed architectural drawings. OpenAI even mentions that it generated a comprehensive bill of materials just by analyzing a complex architectural blueprint.
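
To try this yourself, the image is sent alongside a text question in the same message. The sketch below assumes OpenAI's Chat Completions image-input format (a content part of type "image_url" carrying a base64 data URL) and the model name "o1"; the `build_vision_request` helper is hypothetical, the placeholder bytes stand in for a real blueprint file, and the request is only built, not sent.

```python
import base64
import json


def build_vision_request(image_bytes: bytes, question: str, model: str = "o1") -> dict:
    """Build a Chat Completions request body that pairs an image with a text question."""
    data_url = "data:image/png;base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


# Placeholder bytes; a real call would use something like open("blueprint.png", "rb").read().
body = build_vision_request(
    b"\x89PNG placeholder",
    "List every material shown in this drawing, with quantities.",
)
print(json.dumps(body)[:120])
```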

Coding with Reasoning Models

The advantages of using reasoning models for coding

When it comes to code, you might expect reasoning models to be slower or less efficient. Among non-reasoning models, GPT models might struggle with coding, while Claude is much better at it. The key advantage of reasoning models, however, is that they make far fewer mistakes, so you don't waste time constantly correcting the small errors that non-reasoning models tend to introduce.

Conclusion

In conclusion, OpenAI's Reasoning Best Practices guide offers practical advice for getting the best results from reasoning models. Remember to simplify your prompts, avoid "Chain