The dawn of reinforcement fine-tuning: unlocking the next frontier in AI versatility

Reinforcement fine-tuning (RFT) is making its debut as one of the most significant advancements in AI model customization. Recently showcased in an OpenAI presentation, the approach promises to change how developers, researchers, and enterprises optimize AI models for specific tasks. The presentation showed how combining reinforcement learning techniques with customizable fine-tuning can make frontier reasoning models such as the o1 series more adaptable than ever before.

This article dives into the insights from the transcript of the presentation, exploring the why, what, and how of reinforcement fine-tuning, along with its practical applications.

OpenAI's newest innovation: o1 and reinforcement fine-tuning

OpenAI introduces reinforcement fine-tuning for its customizable o1 series models

Mark, a lead researcher at OpenAI, opened the session by introducing OpenAI's newest model series, o1. Unlike earlier models, the recently launched o1 models "think" for a while before generating a response, working through a problem in a more deliberate decision-making process.

Reinforcement fine-tuning builds on that innovation by letting developers and organizations adapt o1 models to their own datasets, using reinforcement learning principles rather than traditional supervised fine-tuning.

This advancement isn't merely about imitating existing inputs; instead, it trains models to reason more systematically. Because the models have "space to think," as Mark explained, RFT can reinforce the lines of reasoning that lead to correct answers. This improves generalization and lets users build expert models for their own domains.

Why reinforcement fine-tuning matters

The ability to fine-tune AI models holds immense potential, particularly for organizations handling specialized data. Reinforcement fine-tuning sets itself apart by producing AI tools that go beyond matching patterns: it trains models to reason logically over that data. OpenAI highlighted several fields where fine-tuning o1 with reinforcement learning offers transformative use cases:

- Legal & Finance: Developing domain-specific tools for industries reliant on logic-driven analysis.

- Engineering & Insurance: Task-specific reasoning optimized for structured and systematic thought processes.

- Healthcare & Biology: Supporting researchers in areas like genetic analysis and drug development.

For example, OpenAI recently partnered with Thomson Reuters to use reinforcement fine-tuning to improve CoCounsel, Thomson Reuters' AI legal assistant for complex legal research tasks.

OpenAI's RFT applications suit fields like law, healthcare, and AI safety

These examples show how RFT uses reinforcement learning's ability to reward correct reasoning to make models more effective in specialized domains.

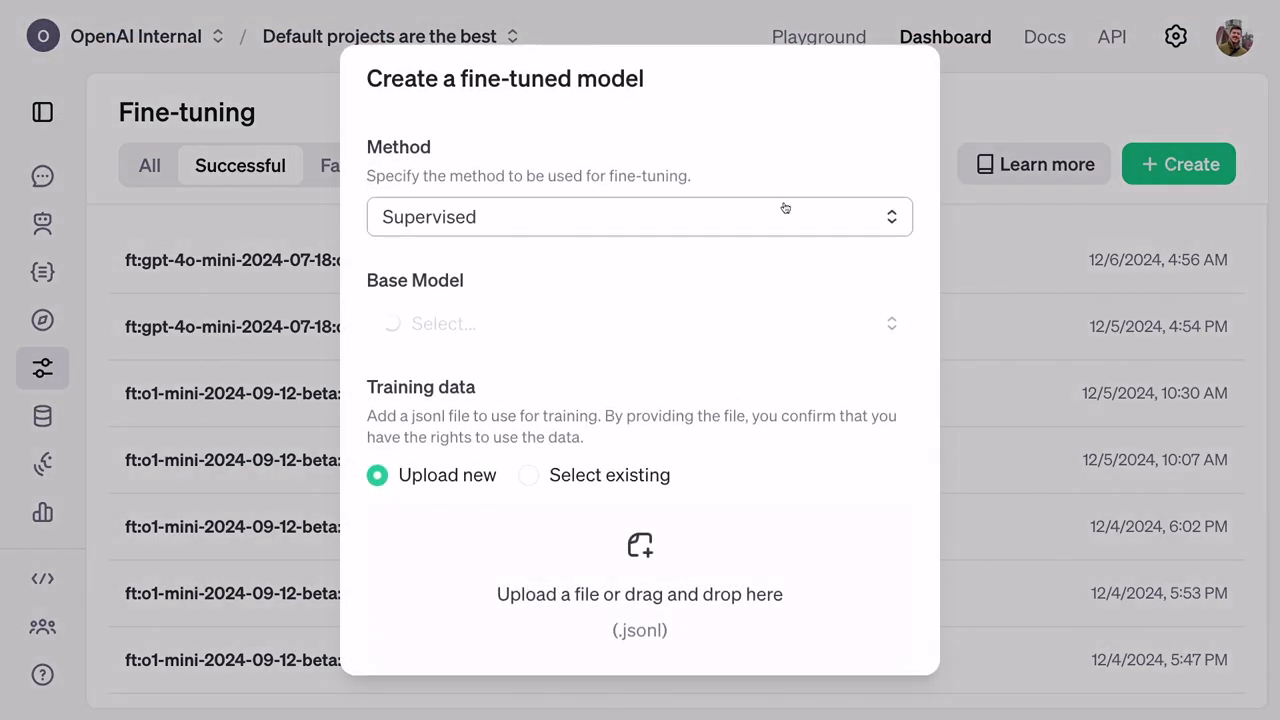

The difference between supervised and reinforcement fine-tuning

Supervised fine-tuning mirrors styles or tones, while RFT teaches AI to logically reason in new ways

In 2022, OpenAI introduced supervised fine-tuning to its API toolkit. While powerful in its own right, supervised fine-tuning teaches a model to replicate features of the examples it is given. That is useful for changing tone, style, or format, but less suited to solving reasoning-based problems.

In contrast, reinforcement fine-tuning emphasizes teaching models to reason and solve problems. Here’s how:

- Critical thinking space: RFT enables the AI model to pause, assess a problem, and generate logical solutions based on evidence.

- Feedback-driven learning: Correct answers are rewarded and incorrect ones are penalized, which reinforces the reasoning paths that led to correct results and guides the model toward greater competence.

- Generalization across domains: Even when trained on small datasets, models fine-tuned with RFT learn to reason better over custom domains.
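The feedback loop described above can be illustrated with a toy example. The sketch below is a plain REINFORCE policy-gradient update on a softmax over three candidate answers, a textbook technique swapped in purely for illustration: the presentation did not disclose OpenAI's actual RFT training algorithm. The function names (`softmax`, `reinforce_step`), the placeholder gene names, the learning rate, and the 0/1 reward are all hypothetical.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(logits, reward_fn, lr=0.5, rng=random):
    """One hypothetical REINFORCE update: sample an answer, score it, nudge logits.

    The gradient of log softmax(logits)[choice] with respect to the logits is
    onehot(choice) - probs, scaled here by the reward and the learning rate.
    """
    probs = softmax(logits)
    choice = rng.choices(range(len(logits)), weights=probs)[0]
    reward = reward_fn(choice)
    return [
        logit + lr * reward * ((1.0 if i == choice else 0.0) - p)
        for i, (logit, p) in enumerate(zip(logits, probs))
    ]


# Placeholder candidates; index 1 is the answer the grader rewards.
candidates = ["GENE_A", "GENE_B", "GENE_C"]
correct = 1
rng = random.Random(0)
logits = [0.0, 0.0, 0.0]
for _ in range(200):
    logits = reinforce_step(
        logits, lambda i: 1.0 if i == correct else 0.0, rng=rng
    )
probs = softmax(logits)
```

A supervised update would instead raise the target answer's score on every step regardless of what the model produced. Here the model only learns from answers it actually samples and is rewarded for, which is the kind of reward-driven feedback the bullets describe.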

For instance, as discussed in the presentation, training models on biomedical data produced noticeable improvements with as few as a few dozen training examples.

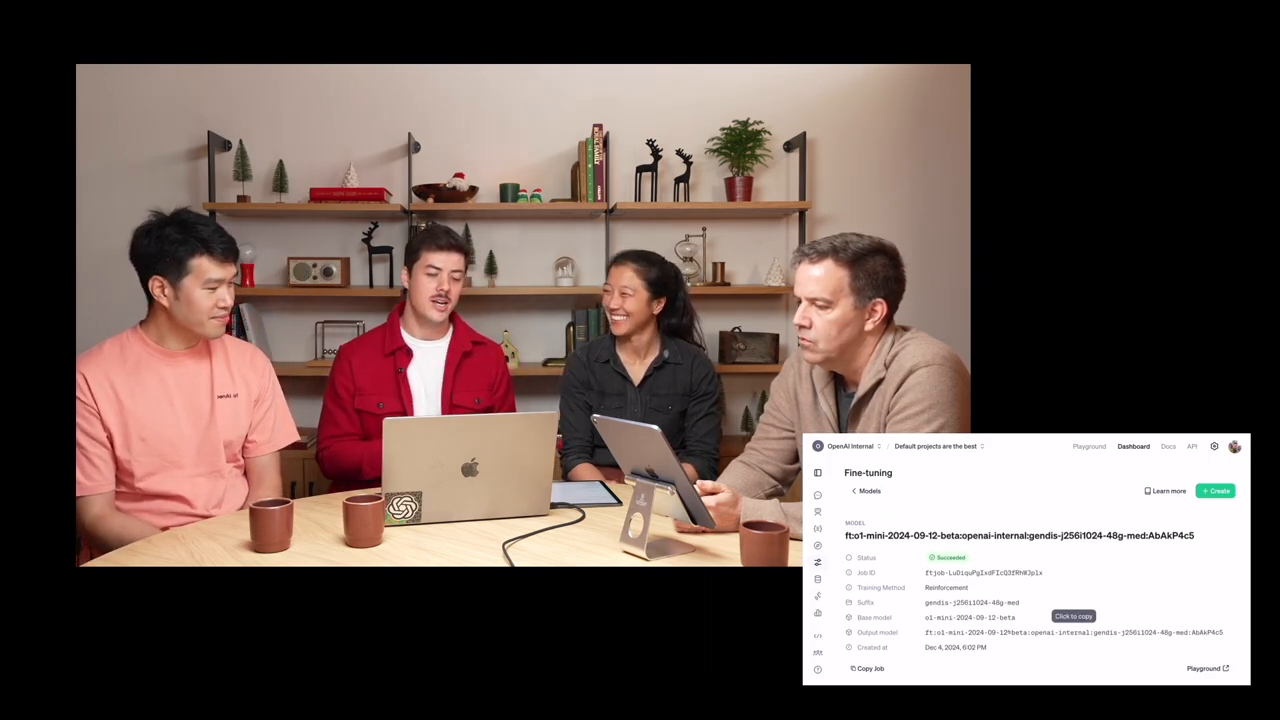

Scientific adoption: exploring genetics with reinforcement fine-tuning

OpenAI and researchers collaborate to study genetic illnesses using reinforcement fine-tuning

One of the most exciting domains where reinforcement fine-tuning is currently being applied is scientific research. Berkeley Lab computational biologist Justin Reese shared his experience in leveraging OpenAI's models to explore rare genetic diseases.

Each rare disease is uncommon on its own, yet together these diseases affect over 300 million people globally. Diagnosing them often takes months or years because of the specialized knowledge required. By applying reinforcement fine-tuning to datasets extracted from:

- Medical case reports

- Lists of symptoms (both present and absent)

- The genes responsible for specific diseases

Justin and his team have built an AI model that reasons systematically from a patient's symptoms to the genes most likely responsible, with the aim of speeding up research workflows and improving diagnostic accuracy.

This multidisciplinary collaboration—spanning Berkeley Lab, Charité Hospital in Germany, and global medical initiatives—exemplifies RFT’s transformative potential.

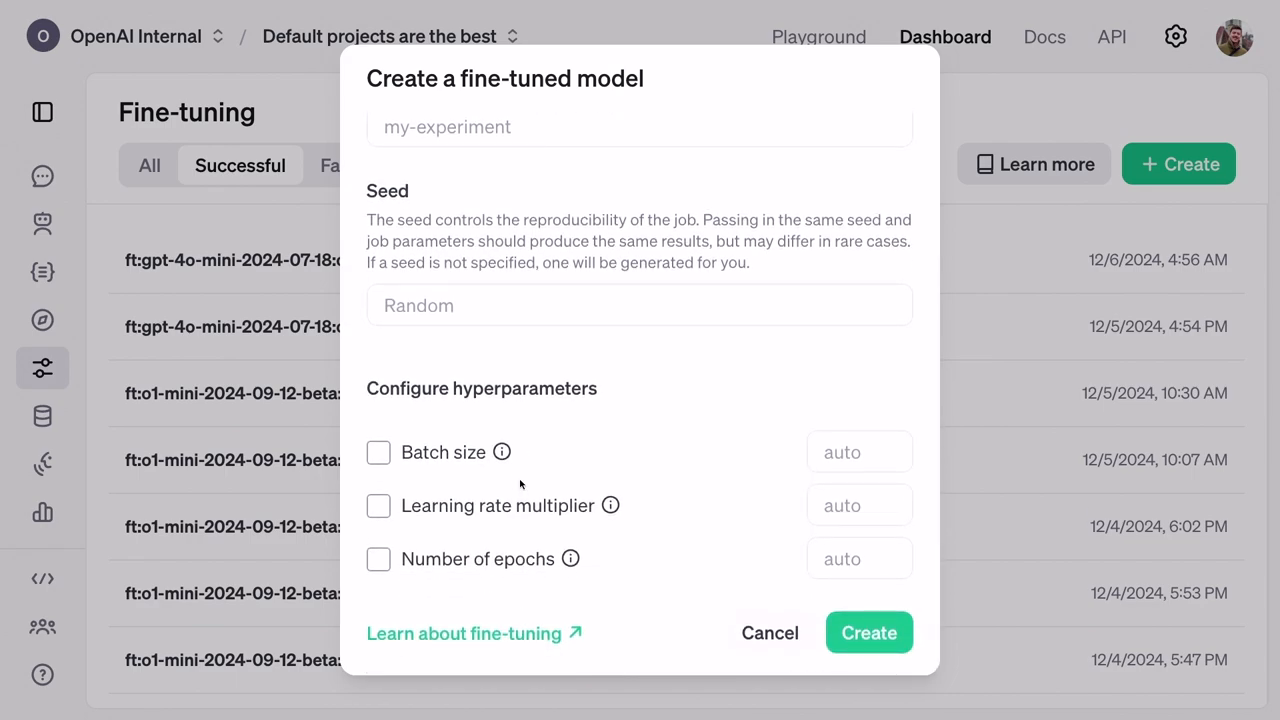

Step-by-step: how reinforcement fine-tuning works

Model training begins by uploading custom datasets paired with evaluation criteria (graders)

Reinforcement fine-tuning operates through a structured process:

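Dataset preparation: Training data is supplied as JSONL files, with one example per line. Each example contains an input (such as a case report), instructions, and the correct answer. The correct answer is hidden from the model during training and used only to grade its output, so the model cannot simply copy it.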
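A minimal sketch of such a file is shown below. The field names (`case_report`, `instructions`, `correct_answer`), the helper functions, and the symptom and gene names are hypothetical placeholders, not OpenAI's documented RFT schema. The sketch shows the JSONL convention (one JSON object per line) and how the answer key can be kept out of what the model sees.

```python
import json

# Hypothetical record layout: field names are illustrative only and are not
# OpenAI's documented RFT schema. Symptoms and genes are placeholders.
examples = [
    {
        "case_report": "Present: symptom_a, symptom_b. Absent: symptom_c.",
        "instructions": "List candidate genes, most likely first.",
        "correct_answer": "GENE_X",
    },
    {
        "case_report": "Present: symptom_d. Absent: symptom_a, symptom_e.",
        "instructions": "List candidate genes, most likely first.",
        "correct_answer": "GENE_Y",
    },
]


def to_jsonl(records):
    """Serialize one JSON object per line (the JSONL convention)."""
    return "".join(json.dumps(record) + "\n" for record in records)


def prompt_view(record):
    """What the model sees during training: everything except the answer key."""
    return {key: value for key, value in record.items() if key != "correct_answer"}


jsonl_text = to_jsonl(examples)
first_prompt = prompt_view(examples[0])
```

Keeping the answer in the same record but filtering it out of the prompt means the grader and the model work from one file while only the grader ever reads the answer.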

Validation: During training, the model is also evaluated on a separate validation dataset to check that it applies genuine reasoning to new cases rather than memorizing answers from the training set.
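One way to make the memorization check strict, assumed here rather than taken from the presentation, is to split the data by correct answer so that no gene appears in both the training and validation sets. The function `split_by_answer`, its parameters, and the toy records are hypothetical.

```python
import random


def split_by_answer(records, val_fraction=0.2, seed=0):
    """Split records so that no correct answer appears in both sets.

    Grouping by answer means the validation score cannot be raised by
    memorizing which gene went with which training case.
    """
    answers = sorted({record["correct_answer"] for record in records})
    rng = random.Random(seed)
    rng.shuffle(answers)
    n_val = max(1, int(len(answers) * val_fraction))
    val_answers = set(answers[:n_val])
    train = [r for r in records if r["correct_answer"] not in val_answers]
    val = [r for r in records if r["correct_answer"] in val_answers]
    return train, val


# Hypothetical toy data: 10 placeholder genes, 3 cases each.
records = [
    {"case_id": i, "correct_answer": f"GENE_{i % 10}"} for i in range(30)
]
train, val = split_by_answer(records)
```

With 10 distinct answers and `val_fraction=0.2`, two genes (and their six cases) go to validation. A plain random split by case would be simpler, but it could let the same gene appear on both sides.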

Graders for scoring: Graders, a concept specific to RFT, compare the model's output with the correct answer and assign a score, rewarding correct answers and penalizing wrong ones.
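The sketch below shows one possible grader for the gene-ranking task. It assumes the model returns a ranked list of gene names and gives partial credit based on where the correct gene appears. The reciprocal-rank rule and the name `rank_grader` are our own illustrative choices, not the grader used in the presentation.

```python
def rank_grader(predicted_genes, correct_gene):
    """Score a ranked list of gene predictions against the answer key.

    Returns 1.0 if the correct gene is ranked first, 1/rank if it appears
    lower in the list, and 0.0 if it is missing. Matching ignores case and
    surrounding whitespace.
    """
    normalized = [gene.strip().upper() for gene in predicted_genes]
    target = correct_gene.strip().upper()
    if target not in normalized:
        return 0.0
    rank = normalized.index(target) + 1  # ranks are 1-based
    return 1.0 / rank
```

For example, `rank_grader(["GENE_A", "gene_x", "GENE_B"], "GENE_X")` returns `0.5` because the correct gene is ranked second. Partial credit gives the model a signal even when its first guess is wrong, whereas an all-or-nothing grader only rewards a perfect top answer.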

Training infrastructure: Because RFT runs on OpenAI's distributed training systems, users can apply these techniques without building or operating the training infrastructure themselves.

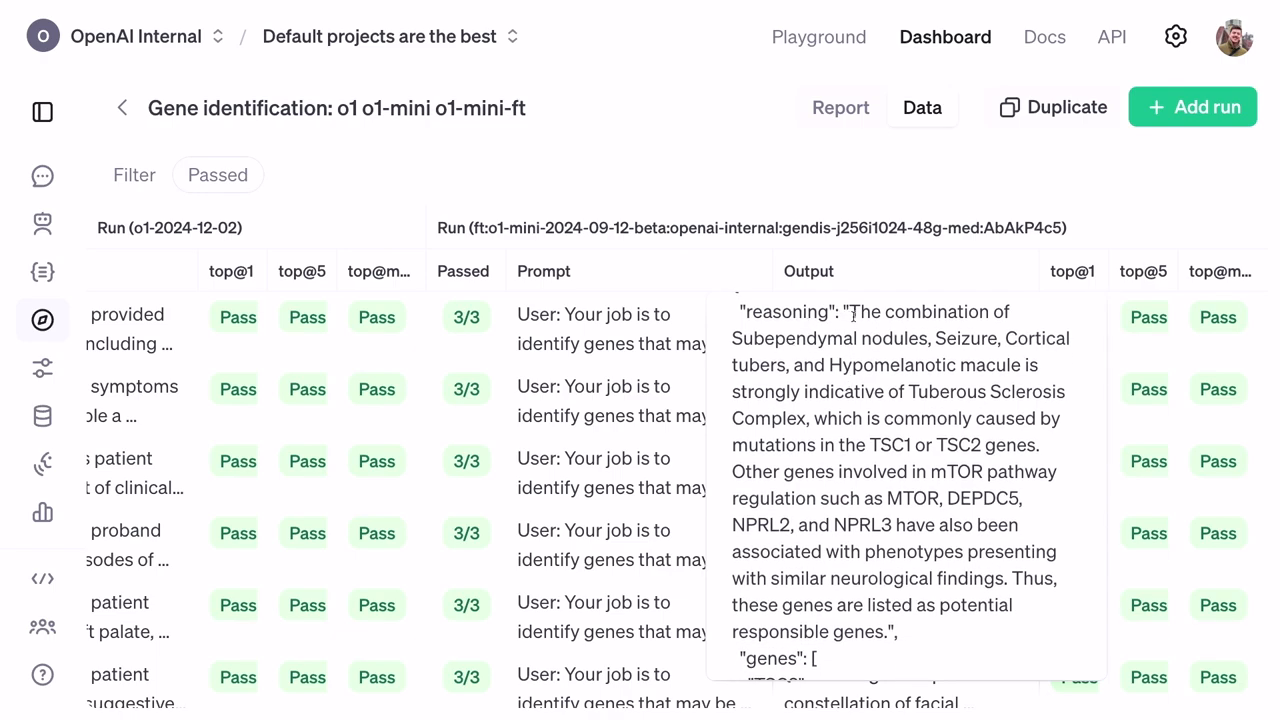

Performance measurement: After training, evaluations measure accuracy with ranking metrics such as top@1 (the correct gene is ranked first) and top@5 (the correct gene appears among the first five predictions), showing how much the fine-tuned model has improved.
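The top@k metrics described above reduce to a short computation. In this sketch, the function name `top_k_accuracy` and the prediction lists are hypothetical. Passing `k=None`, which counts a hit anywhere in the ranked list, is an extra convenience not taken from the article.

```python
def top_k_accuracy(rankings, answers, k=None):
    """Fraction of cases whose correct answer appears in the first k predictions.

    With k=None the whole ranked list counts.
    """
    if len(rankings) != len(answers) or not answers:
        raise ValueError("rankings and answers must be non-empty and equal in length")
    hits = 0
    for ranked, answer in zip(rankings, answers):
        window = ranked if k is None else ranked[:k]
        if answer in window:
            hits += 1
    return hits / len(answers)


# Hypothetical validation predictions for three cases.
rankings = [
    ["GENE_A", "GENE_B"],
    ["GENE_C", "GENE_D", "GENE_E", "GENE_F", "GENE_G", "GENE_H"],
    ["GENE_X"],
]
answers = ["GENE_A", "GENE_H", "GENE_Y"]
top1 = top_k_accuracy(rankings, answers, k=1)
top5 = top_k_accuracy(rankings, answers, k=5)
top_any = top_k_accuracy(rankings, answers)
```

Here only the first case counts at k=1 and k=5, because the second case's correct gene is ranked sixth. The unrestricted count adds that case, giving 2/3. A larger k can only add hits, so top@1 is never higher than top@5.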

Fine-tuning improves accuracy in identifying the responsible genes, measured by where they appear in the model's ranked predictions.

Real-world implications: performance results & reasoning

Validation scores show the stronger reasoning that fine-tuning unlocks.

The reinforcement fine-tuned models outperformed their untuned baselines, and the results showed that even smaller, cheaper models can exceed more resource-intensive baselines after tuning. The improvement was especially evident for o1-mini, whose fine-tuned version reasoned better and ranked the correct gene for a set of symptoms substantially more often.

The model's outputs also included explanations for its predictions, letting researchers like Justin Reese inspect the exact reasoning behind each answer, a crucial feature for high-stakes diagnoses.

Looking ahead: reinforcement fine-tuning for broader use cases

OpenAI announces an expanded research program for reinforcement fine-tuning.

Reinforcement fine-tuning's potential extends beyond healthcare: OpenAI pointed to applications in bioinformatics, AI safety, and law. Meanwhile, OpenAI's newly expanded alpha research program gives universities and enterprises the opportunity to pilot reinforcement fine-tuned models in their own specialized domains.

The program invites organizations working on complex tasks to test RFT ahead of its public release, which is expected in early 2025.

Conclusion: bridging innovation with domain needs

Reinforcement fine-tuning offers broad potential for task-specific AI applications

Reinforcement fine-tuning marks a major step in adapting AI to specialized tasks, from biology to finance. By combining the reasoning of models like o1 with expert-curated data, OpenAI is paving the way for AI systems that reason more rigorously, generalize better, and help tackle some of humanity's toughest problems.

While RFT's applications are still evolving, its current impact foreshadows a future where adaptable AI systems become indispensable to both academic and industrial sectors. The roadmap remains clear: combine domain expertise with OpenAI’s scalable infrastructure to pioneer solutions for tomorrow's technological frontiers.