How to Run a Locally Hosted AI Coding Assistant in VSCode

In this article, we'll see how to connect a locally installed Large Language Model (LLM) to VSCode using the Continue extension, so you can optimize your coding sessions. The whole process takes less than 5 minutes, and we'll walk you through it step by step.

Introduction to Locally Hosted AI Coding Assistants

Introduction to the video and the purpose of the tutorial

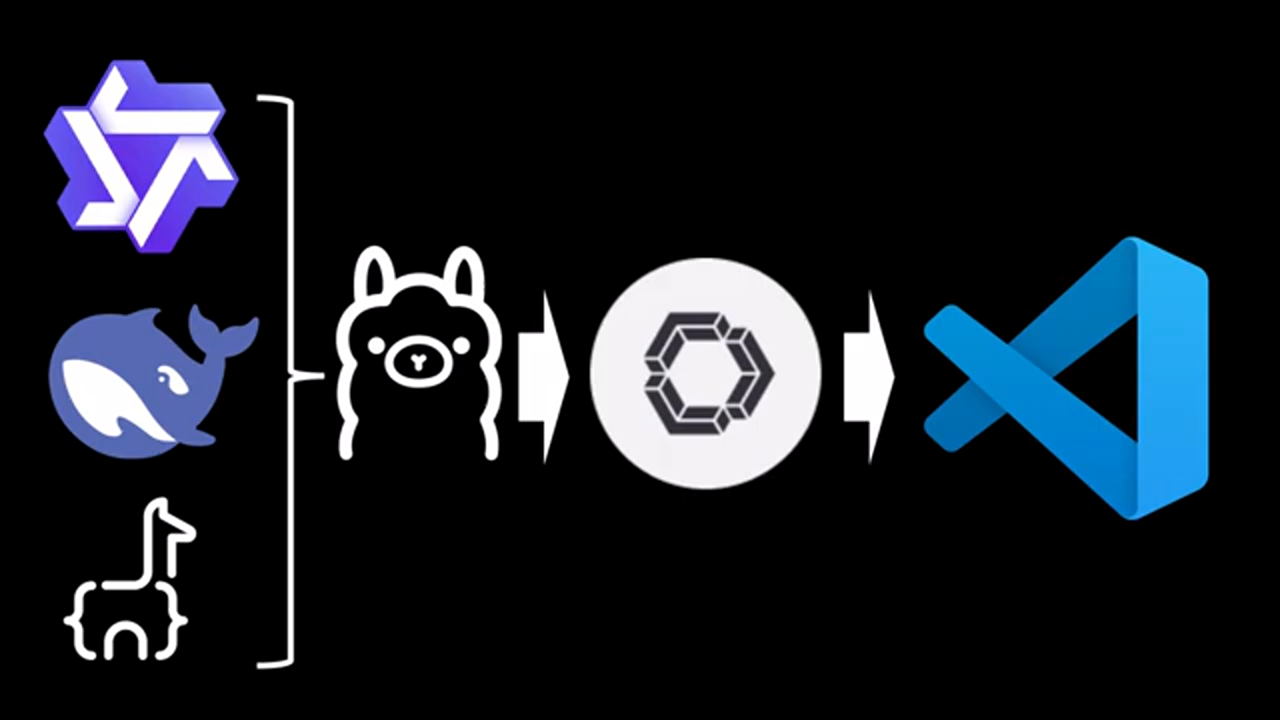

The video opens by introducing the concept of locally hosted AI coding assistants and their benefits. The speaker explains that they will show how to connect a locally installed LLM to VSCode, which makes it possible to optimize your coding sessions.

Requirements for the Tutorial

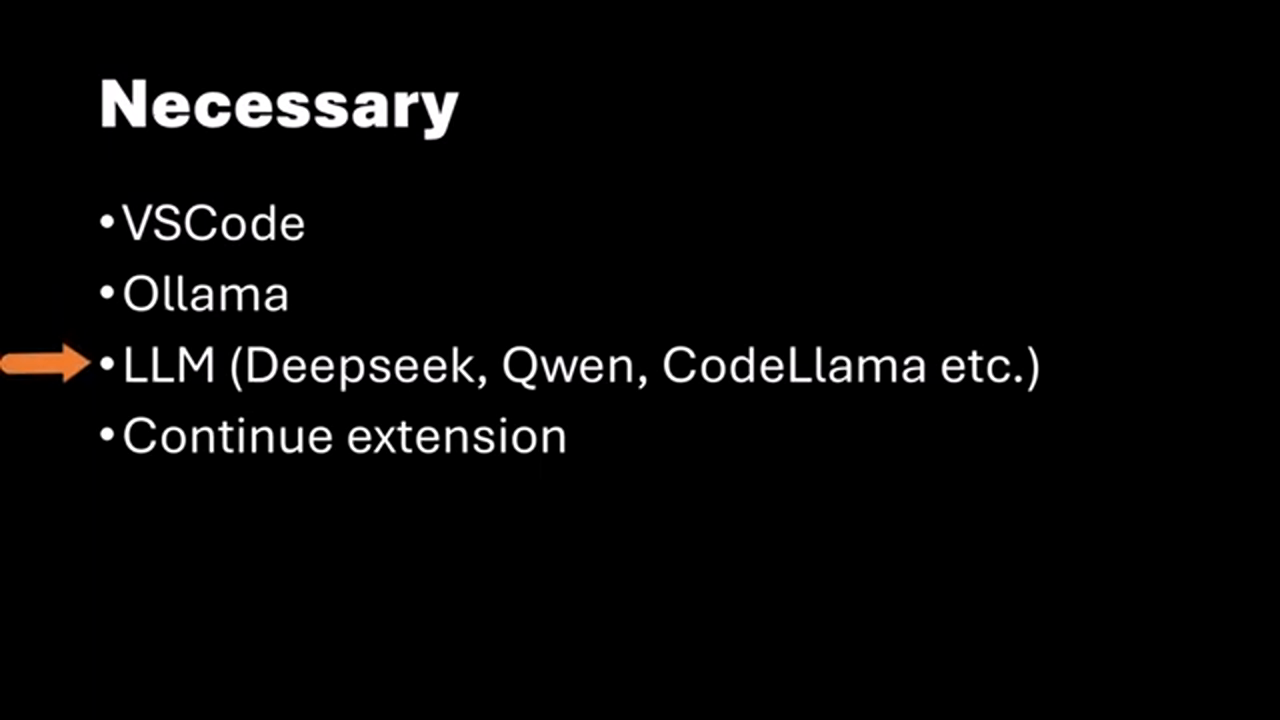

Requirements for following the tutorial, including VSCode and an LLM installation

To follow this tutorial, you need VSCode installed on your machine. You also need a locally installed LLM served by a tool such as Ollama, and the server must be running. If you don't know how to install Ollama and an LLM, the speaker points to a previous video that explains the process in 10 minutes.
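
Before connecting anything to VSCode, it is worth confirming that the LLM server is actually running. The sketch below assumes the server is Ollama on its default address, `http://localhost:11434`, and uses Ollama's `GET /api/tags` endpoint, which lists the locally installed models. The function names are illustrative and not part of any tool shown in the video.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Query the Ollama server and return the names of its installed models."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except OSError as exc:  # URLError and socket timeouts are both OSError subclasses
        raise ConnectionError(f"Ollama is not reachable at {base_url}: {exc}") from exc
    return parse_model_names(payload)
```

Calling `list_local_models()` from a Python shell should return the names of the models you have pulled; a `ConnectionError` usually means the Ollama server is not running or is listening on a different address.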

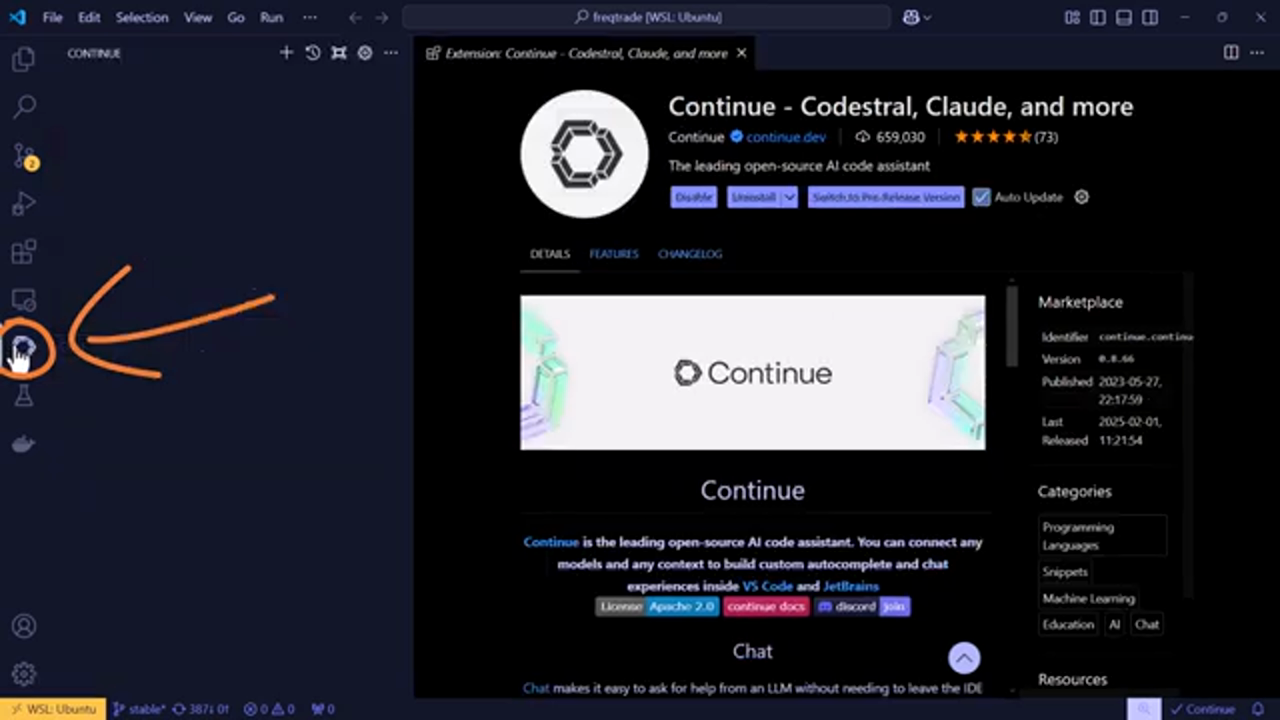

Installing the Continue Extension

Installing the Continue extension in VSCode

Once you have VSCode and a locally installed LLM, you need to install the Continue extension. This extension lets you connect your LLM to VSCode. The speaker walks you through finding the Continue extension in the VSCode Extensions view and installing it.
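
If you prefer the terminal to the Extensions view, VSCode's `code --install-extension` option installs an extension by its Marketplace ID. A minimal Python wrapper, assuming the Continue extension's ID is `Continue.continue` and that the `code` launcher is on your `PATH` (the helper names are hypothetical):

```python
import shutil
import subprocess

# Assumption: Marketplace ID of the Continue extension.
CONTINUE_EXTENSION_ID = "Continue.continue"


def install_command(extension_id: str = CONTINUE_EXTENSION_ID, code_cli: str = "code") -> list[str]:
    """Build the VSCode CLI command that installs an extension by its Marketplace ID."""
    return [code_cli, "--install-extension", extension_id]


def install_continue() -> None:
    """Run the install command; fail early if the `code` launcher is not on PATH."""
    code_cli = shutil.which("code")
    if code_cli is None:
        raise FileNotFoundError("'code' is not on PATH; install Continue from the Extensions view instead.")
    # check=True raises CalledProcessError if the CLI reports a failure.
    subprocess.run(install_command(code_cli=code_cli), check=True)
```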

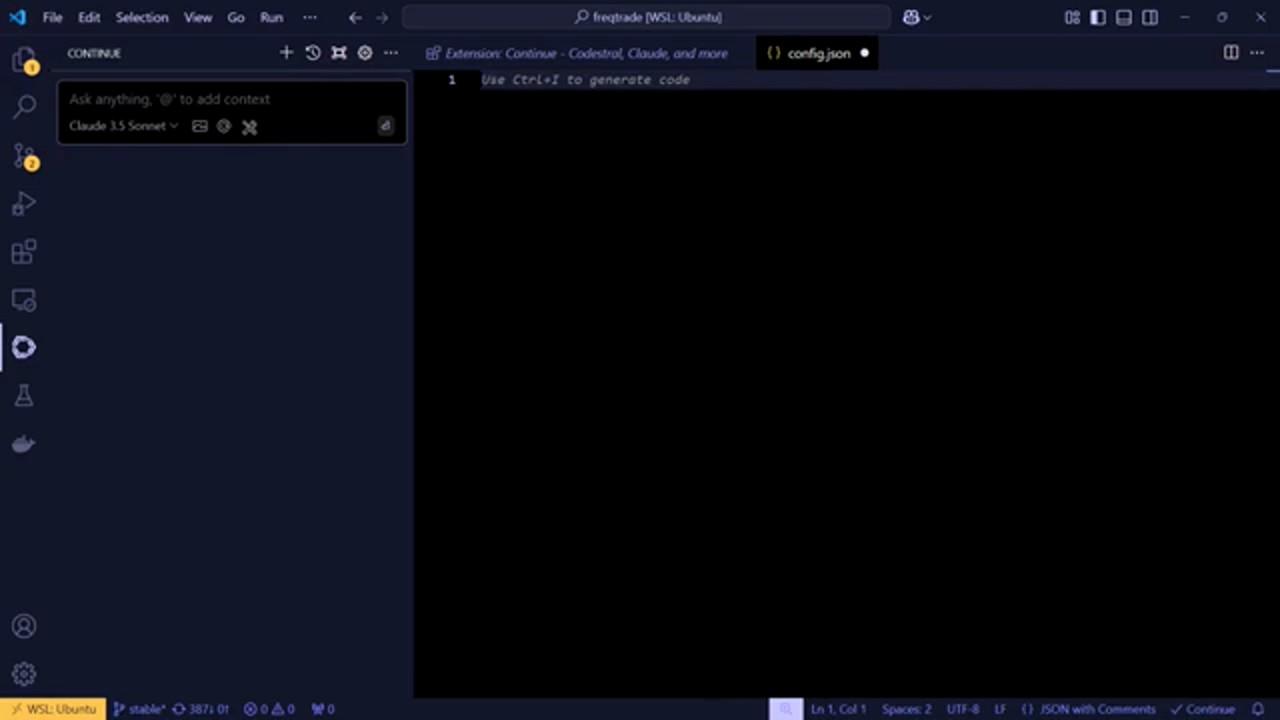

Configuring the Continue Extension

Configuring the Continue extension to work with the locally installed LLM

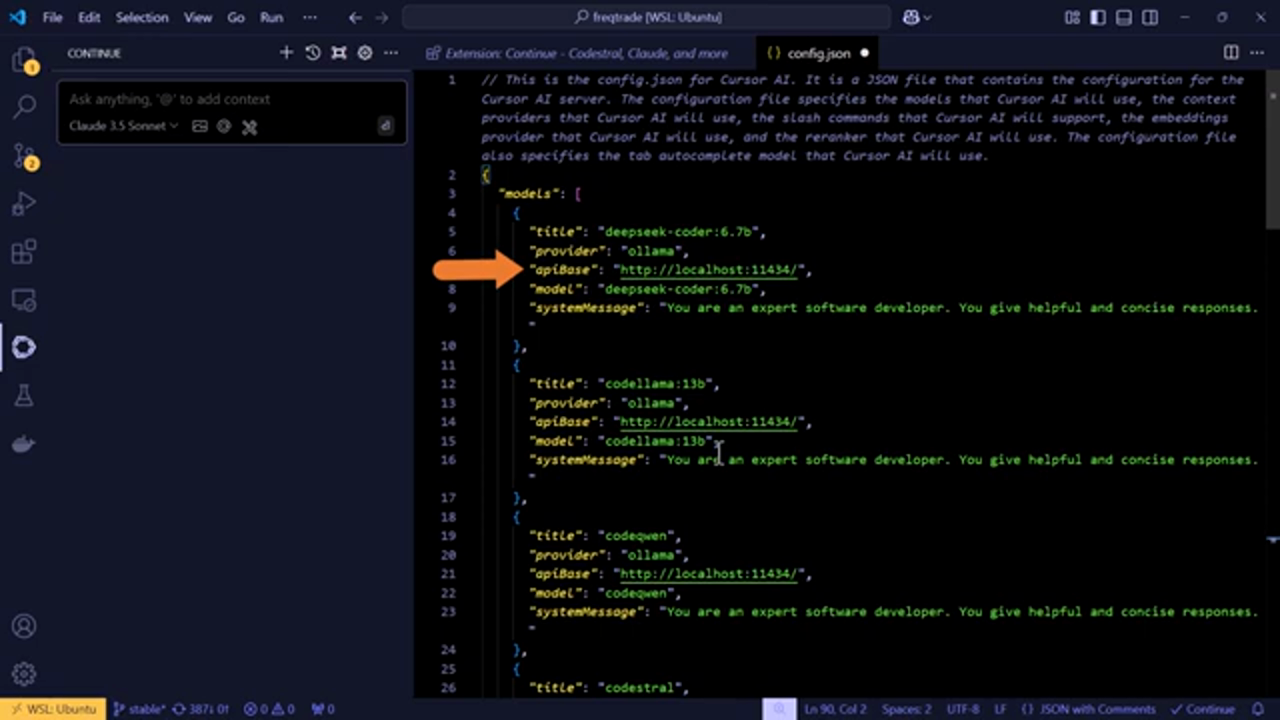

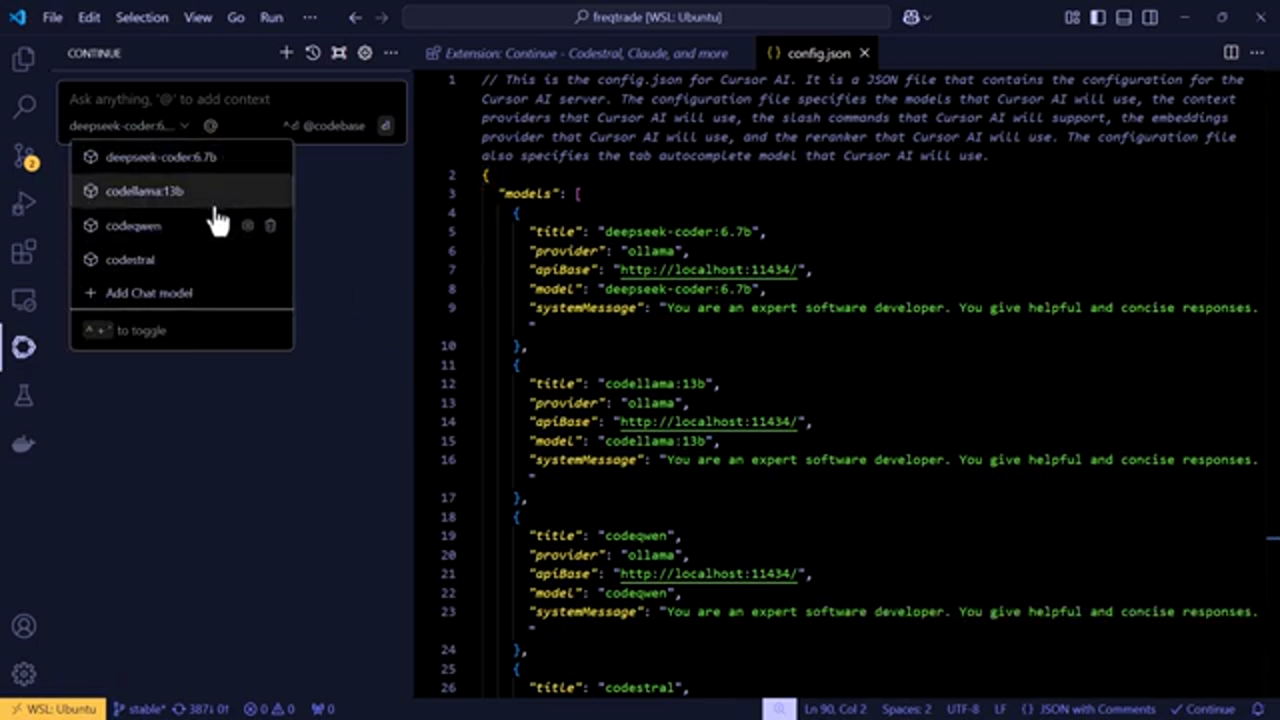

Once the Continue extension is installed, you need to configure it to work with your locally installed LLM. The speaker shows how to open the Continue extension's config.json file and add your LLM settings. This involves specifying the models installed on your LLM server and configuring the provider, IP address, and port number.
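
The settings listed above (model, provider, IP address, port) end up as one entry in the `models` list of Continue's configuration. A sketch that builds such an entry, assuming the `ollama` provider name and the `apiBase` field used by Continue's JSON config; the model name `llama3` and the address `192.168.1.50` are hypothetical placeholders for your own server:

```python
import json


def ollama_model_entry(title: str, model: str, host: str = "localhost", port: int = 11434) -> dict:
    """Build one entry for the "models" list in Continue's config.json.

    Assumption: Continue's Ollama provider reads the server address from "apiBase".
    """
    return {
        "title": title,        # label shown in Continue's model picker
        "provider": "ollama",  # which backend Continue should talk to
        "model": model,        # must match a model installed on the server
        "apiBase": f"http://{host}:{port}",
    }


# Hypothetical example: a model on another machine in the local network.
entry = ollama_model_entry("Llama 3 (LAN server)", "llama3", host="192.168.1.50")
print(json.dumps(entry, indent=2))
```

Pointing `apiBase` at another machine's IP address lets VSCode use an LLM server elsewhere on the network; for a server on the same machine, the default `localhost` is enough.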

Adding Your LLM Settings

Adding your LLM settings to the config.json file

The speaker explains in detail how to add your LLM settings to the config.json file. These include the model name, the system message, and the other settings the Continue extension needs in order to work with your LLM.
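
The model entry and its system message are then written into the config file. A sketch that inserts or replaces a model entry (matched by its `title`) and saves the file, assuming the config lives at `~/.continue/config.json` and that each model entry accepts a `systemMessage` field. Newer Continue releases may use a different config file, so check which one your version opens.

```python
import json
from pathlib import Path
from typing import Optional

# Assumption: location of Continue's JSON config.
DEFAULT_CONFIG = Path.home() / ".continue" / "config.json"


def add_model(entry: dict, system_message: Optional[str] = None, config_path: Path = DEFAULT_CONFIG) -> dict:
    """Insert or replace a model entry by "title", then save and return the config."""
    config = json.loads(config_path.read_text(encoding="utf-8")) if config_path.exists() else {}
    # Drop any existing entry with the same title so re-running does not duplicate it.
    models = [m for m in config.get("models", []) if m.get("title") != entry["title"]]
    new_entry = dict(entry)
    if system_message is not None:
        new_entry["systemMessage"] = system_message  # assumption: per-model system prompt field
    models.append(new_entry)
    config["models"] = models
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config
```

The function rewrites the whole file, so back up your existing config before running it against the real one.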

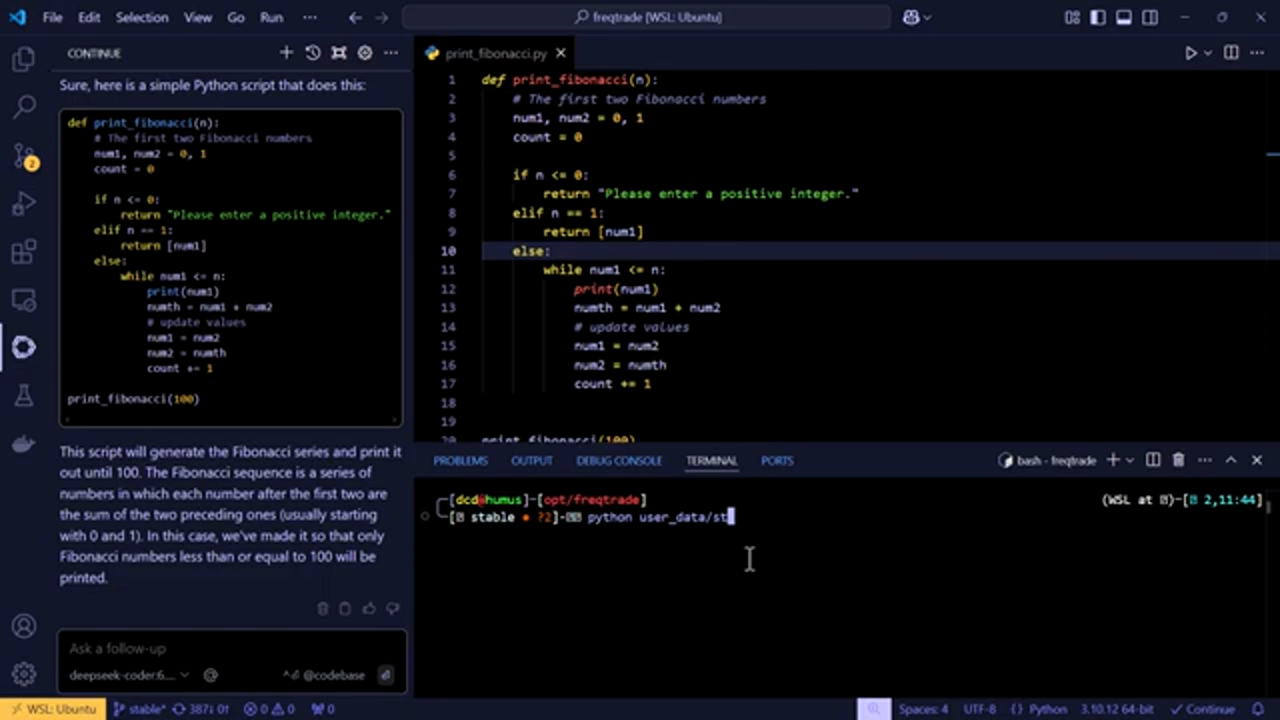

Testing the Configuration

Testing the configuration to make sure it works as expected

After configuring the Continue extension, the speaker tests the configuration by asking the LLM to generate a piece of Python code. The LLM generates the code successfully, which shows that the configuration is correct.
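
If Continue does not respond, it helps to test the server on its own. A minimal sketch that sends the same kind of request as the video's test (asking for some Python code) straight to Ollama's `POST /api/generate` endpoint, assuming the default address; the model you pass must be one installed on your server.

```python
import json
import urllib.request


def generate_payload(model: str, prompt: str) -> bytes:
    """Body for Ollama's POST /api/generate; stream=False asks for one JSON reply."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def ask_ollama(model: str, prompt: str, base_url: str = "http://localhost:11434", timeout: float = 120.0) -> str:
    """Send one prompt and return the generated text from the "response" field."""
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=generate_payload(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["response"]
```

For example, `print(ask_ollama("llama3", "Write a Python function that reverses a string."))`, where `llama3` is a hypothetical model name. If this call works but Continue does not, the problem is most likely in the config file rather than the server.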

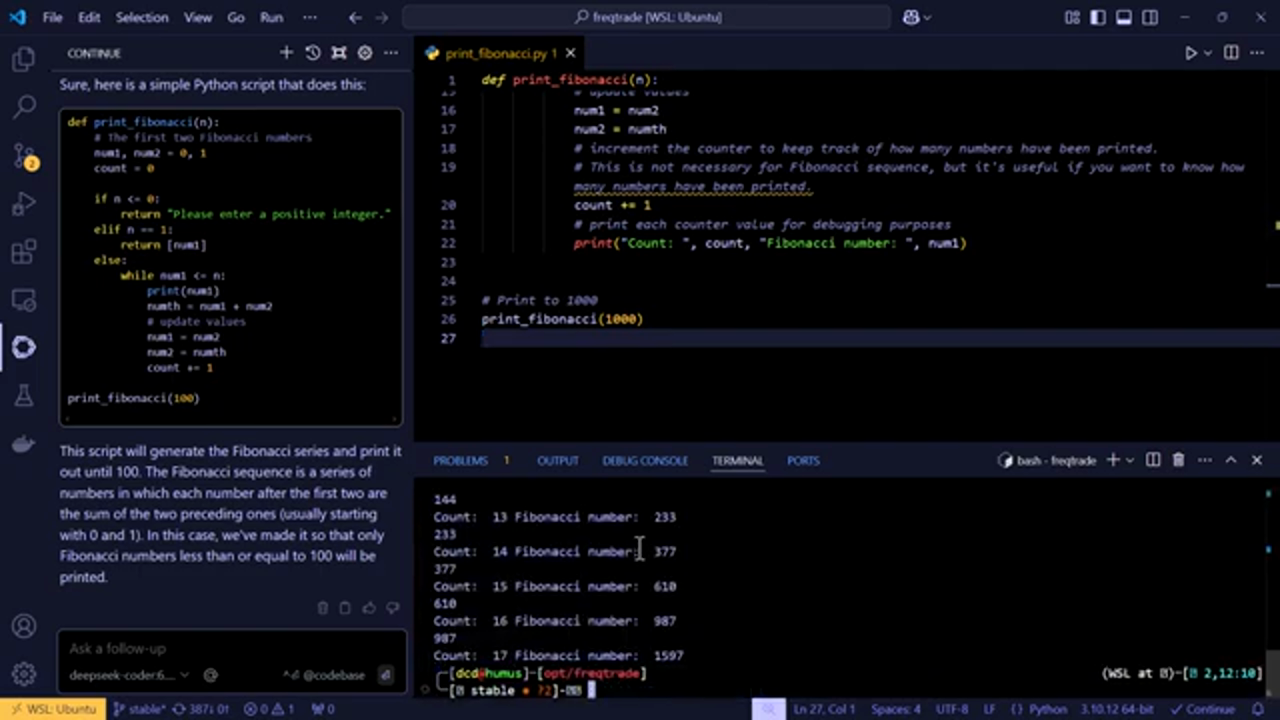

Autocomplete and Code Generation

Autocomplete and code generation with the LLM

The speaker also demonstrates the LLM's autocomplete feature, which suggests code completions as you type. In addition, the LLM can generate code from a prompt, which makes it a powerful coding tool.
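
Autocomplete is configured separately from the chat models. A sketch that adds a tab-autocomplete model served by Ollama, assuming Continue's JSON config key `tabAutocompleteModel`; `starcoder2:3b` is only an example of a small code model, not necessarily the one used in the video.

```python
import json


def with_autocomplete_model(config: dict, model: str, host: str = "localhost", port: int = 11434) -> dict:
    """Return a copy of a Continue config with a tab-autocomplete model served by Ollama.

    Assumption: Continue's config.json uses the "tabAutocompleteModel" key for this.
    """
    updated = dict(config)  # shallow copy: the caller's dict is left unchanged
    updated["tabAutocompleteModel"] = {
        "title": f"{model} (autocomplete)",
        "provider": "ollama",
        "model": model,
        "apiBase": f"http://{host}:{port}",
    }
    return updated


cfg = with_autocomplete_model({"models": []}, "starcoder2:3b")
print(json.dumps(cfg["tabAutocompleteModel"], indent=2))
```

A small model is usually preferred for this role because completions are requested continuously as you type, so latency matters more than it does for chat.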

Conclusion and Next Steps

Conclusion and next steps for using an LLM with VSCode

The speaker wraps up the tutorial by summarizing the steps taken to connect a locally installed LLM to VSCode using the Continue extension. They also suggest next steps for using the LLM with VSCode, including exploring the autocomplete feature and generating code.

Final Thoughts and Future Plans

Final thoughts and future plans for using an LLM with VSCode

The speaker shares final thoughts on the potential of using a locally installed LLM with VSCode, including the possibility of adding a separate RAG (retrieval-augmented generation) system. They also invite viewers to share ideas and suggestions for future tutorials.

Conclusion and Call to Action

Conclusion and call to action, with links to additional resources

The speaker closes the video by thanking viewers for watching and inviting them to like, subscribe, and comment. They also provide links to additional resources, including their GitHub repository and social media channels.
