The Rise of DeepSeek R1: A New Era in AI

The recent release of DeepSeek R1 has sent shockwaves through the AI community, and many are hailing it as a game changer. But what does this new technology mean for the future of AI, and how does it compare with existing models?

Introducing DeepSeek R1

Introducing DeepSeek R1, a new open-weights model from the new AI startup DeepSeek

DeepSeek, a new AI startup, has created a new open-weights model called R1 that reportedly beats OpenAI's best models on most metrics. The achievement is all the more impressive because DeepSeek reportedly did it on a relatively small budget of about $6 million. It also used GPUs with half the memory bandwidth of the ones OpenAI uses.

Why DeepSeek R1 Matters

Why DeepSeek R1 matters: it can be distilled into other models that run well on slower hardware

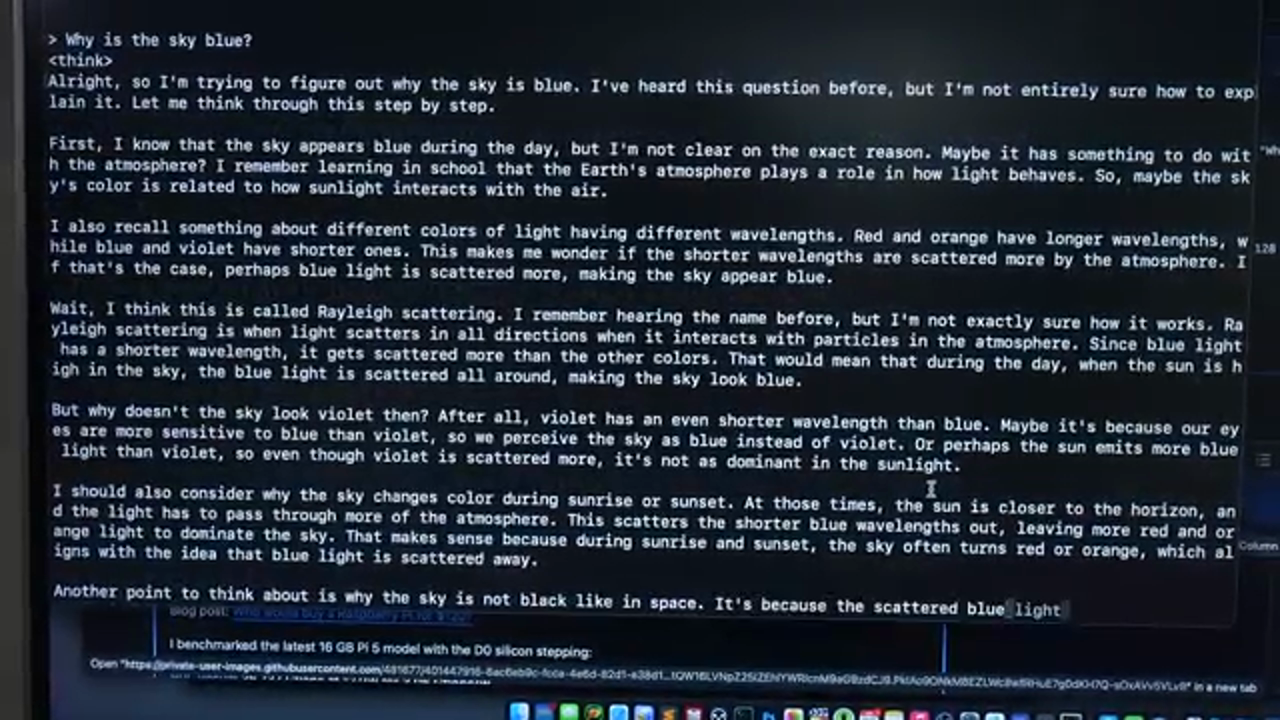

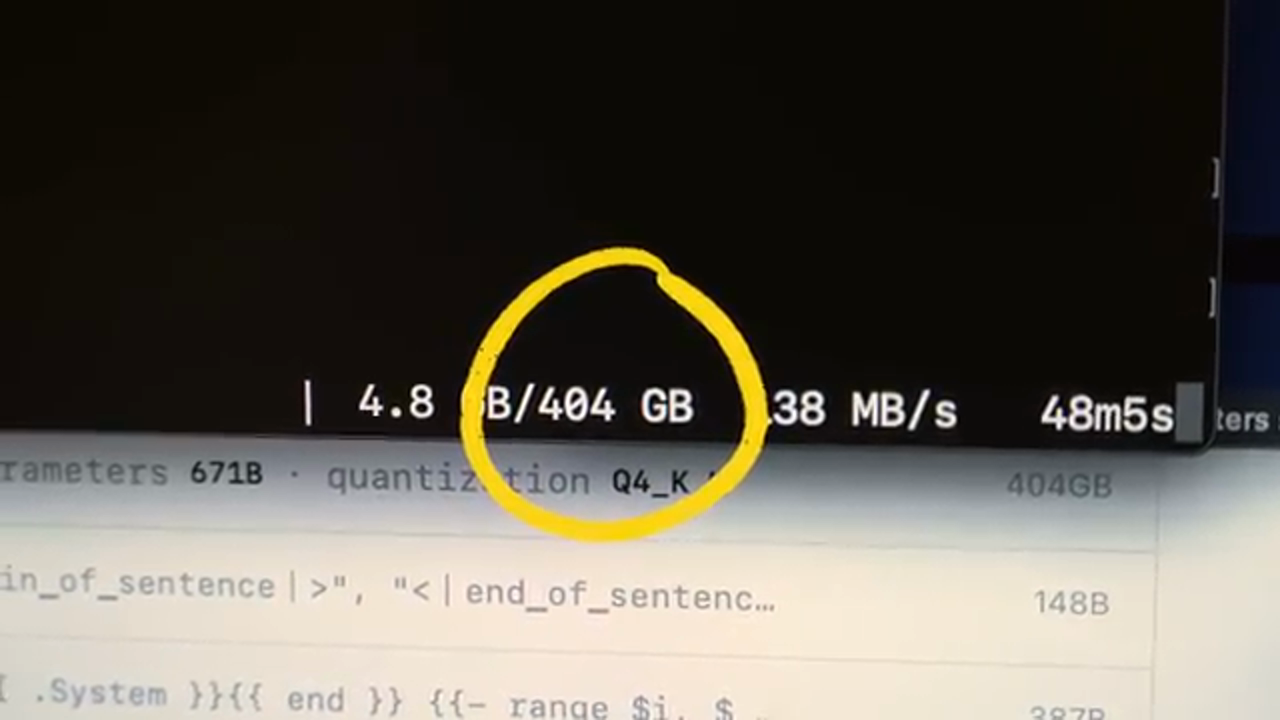

DeepSeek R1 matters because it can be distilled into other, smaller models that run well on slower hardware. As a result, even a Raspberry Pi can run one of the best local Qwen-based AI models, which is a remarkable feat. It is important to note, however, that running DeepSeek R1 on a Raspberry Pi is not the same as running the full 671B model. The full model requires an enormous amount of GPU compute.
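
Why can a Raspberry Pi hold a 14B model but not the 671B one? A back-of-the-envelope calculation of weight memory helps explain the gap. The sketch below multiplies parameter count by bits per weight. The 16-, 8- and 4-bit precisions are illustrative assumptions. Real model files carry some extra overhead, and inference also needs memory for the KV cache.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes).

    (params_billion * 1e9 params) * bits / (8 bits per byte) / (1e9 bytes per GB)
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_weight / 8


# Illustrative precisions only (assumption): 16-bit, 8-bit and 4-bit weights.
for name, params in [("14B", 14), ("671B", 671)]:
    for bits in (16, 8, 4):
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):,.0f} GB")
```

Even at 4 bits per weight, the full 671B model needs hundreds of gigabytes for its weights alone, far beyond a single-board computer. A 4-bit 14B model fits in roughly 7 GB.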

Running DeepSeek R1 on a Raspberry Pi

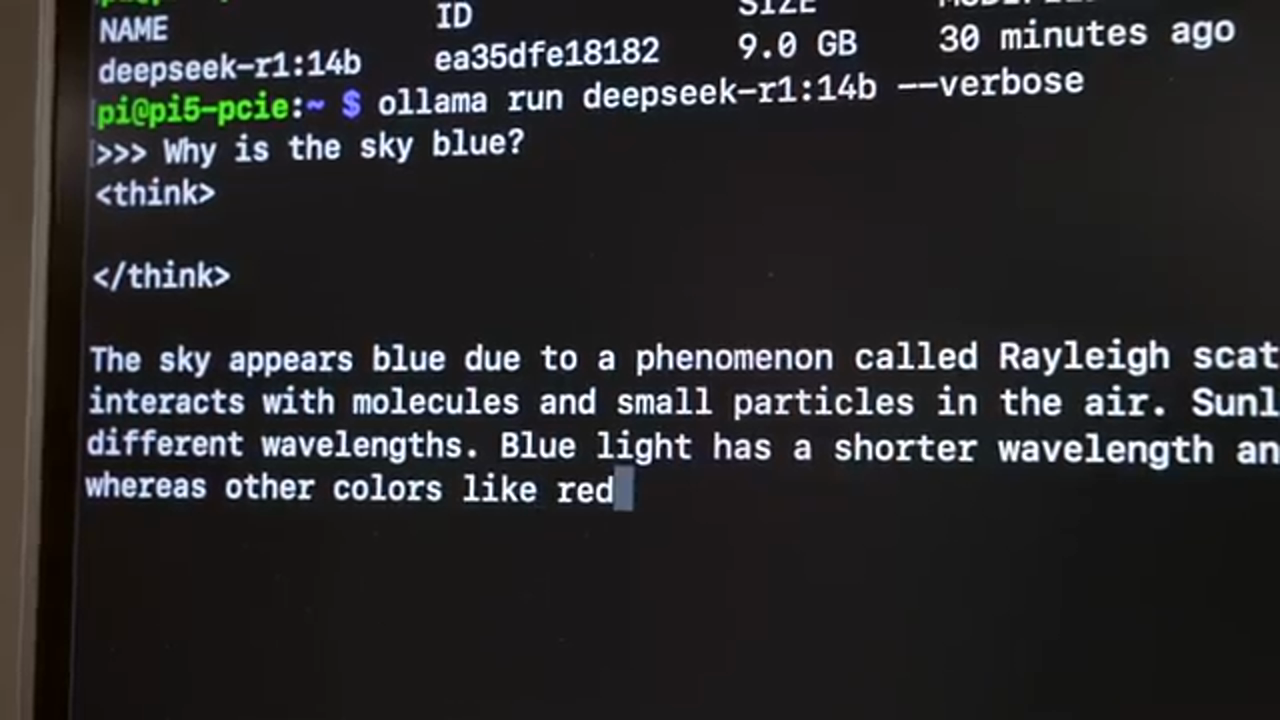

Running DeepSeek R1 on a Raspberry Pi: the 14B model runs, but the full 671B model does not

DeepSeek R1 can run on a Raspberry Pi, but it is important to understand the limitations. The 14B model runs on the Pi, but it won't break any speed records. Across a few different test prompts, the Raspberry Pi managed about 1.2 tokens per second. That is enough for simple tasks such as rubber duck debugging or brainstorming YouTube title ideas.
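
To put 1.2 tokens per second in perspective, the sketch below converts a decode rate into waiting time. The 500-token response length is a hypothetical example. Reasoning models like R1 often emit a long "thinking" section before the answer, so real responses can be longer. The sketch also ignores prompt-processing time.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Wall-clock time to generate num_tokens at a steady decode rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# 1.2 tokens/s is the Raspberry Pi figure from the text;
# 500 tokens is a hypothetical response length.
seconds = generation_seconds(500, 1.2)
print(f"~{seconds:.0f} s (~{seconds / 60:.1f} min)")
```

By the same arithmetic, at 20 tokens per second those 500 tokens would take about 25 seconds. Twenty tokens per second is the low end of the range quoted for an external GPU.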

The Importance of GPUs

The importance of GPUs for running DeepSeek R1: they can improve performance dramatically

GPUs play a major role in running DeepSeek R1 because they can improve performance dramatically. With an external graphics card, the Raspberry Pi reaches much higher speeds: around 20 to 50 tokens per second, depending on the workload. This is because a GPU and its VRAM are much faster than the Pi's CPU and system RAM.
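
One common way to reason about this speedup assumes that single-stream token generation is memory-bandwidth bound. The idea is that, for a dense model, producing each token means reading roughly all of the weights once. The bandwidth divided by the model size then gives an upper bound on tokens per second. All numbers below are hypothetical and chosen for illustration; none are measurements from the text. Real throughput is lower because of compute limits, KV-cache reads and imperfect bandwidth utilization.

```python
def max_tokens_per_second(bandwidth_gb_s: float, model_size_gb: float) -> float:
    """Upper bound on decode speed for a dense model: each token reads all weights once."""
    return bandwidth_gb_s / model_size_gb


# Hypothetical inputs for illustration only (assumptions, not measurements):
MODEL_GB = 9.0          # roughly a 4-bit-quantized 14B model file
SYSTEM_RAM_GB_S = 17.0  # LPDDR4X-class system memory bandwidth
GPU_VRAM_GB_S = 500.0   # mid-range discrete GPU VRAM bandwidth

for label, bw in [("system RAM", SYSTEM_RAM_GB_S), ("GPU VRAM", GPU_VRAM_GB_S)]:
    print(f"{label}: at most ~{max_tokens_per_second(bw, MODEL_GB):.1f} tokens/s")
```

Under these assumptions, the system-RAM bound lands in the same order of magnitude as the Pi's roughly 1.2 tokens per second. The VRAM bound is tens of times higher, which matches the direction of the GPU speedup described above.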

Running DeepSeek R1 on Other Hardware

Running DeepSeek R1 on other hardware: a 192-core server reaches about 4 tokens per second

DeepSeek R1 can also run on other hardware. A 192-core server, for example, reaches about 4 tokens per second. That server is more affordable than a high-end GPU setup and draws only about 800 watts, which makes it a more accessible option for anyone interested in running DeepSeek R1.
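
The power and speed figures above can be combined into an energy cost per token. The sketch assumes the roughly 800 W figure is the sustained draw while generating at about 4 tokens per second. It uses only the numbers from the text plus unit conversions.

```python
def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    # 1 W = 1 J/s, so (J/s) / (tokens/s) = J/token
    return power_watts / tokens_per_second


# Figures from the text (assumed sustained during generation): ~800 W at ~4 tokens/s.
j = joules_per_token(800, 4)
wh_per_1000 = j * 1000 / 3600  # 1 Wh = 3600 J
print(f"{j:.0f} J per token, ~{wh_per_1000:.0f} Wh per 1,000 tokens")
```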

The Future of AI and GPUs

The future of AI and GPUs: AMD GPUs work well, Intel's open-source drivers partly work, and Nvidia may join the fight

The future of AI and GPUs looks promising. AMD GPUs work well, Intel's open-source drivers work to some extent, and Nvidia may yet join the fight. People who want to run AI models on their own hardware will have more options, and we can expect significant improvements in performance and accessibility.

The AI Bubble

The AI bubble: Nvidia lost more than half a trillion dollars in value in a single day, yet its share price is still more than eight times its 2023 level

The AI bubble is still very much alive. Nvidia lost more than half a trillion dollars in market value in a single day after DeepSeek R1's release. Even so, its share price remains more than eight times higher than in 2023, a sign that the hype around AI is still enormous. There are some bright spots, though. One is the realization that training and running AI models does not have to consume vast amounts of energy.

Conclusion

The rise of DeepSeek R1 marks a new era in AI, with significant implications for the future of the technology. Many challenges remain, but AI has enormous potential to improve and to become more accessible. As we move forward, it is important to separate hype from reality. The focus should be on developing AI models that are practical, efficient and accessible to everyone.